Recently, Brimit collaborated with a manufacturer of complex optical devices to develop various software solutions to support the company’s operations. Beyond that, the company wanted to use AI models to create virtual representations of its equipment and predict how changes in specific parameters might affect other variables and sales. Due to security concerns, the manufacturer was hesitant to use cloud-based AI and requested an AI model that could be deployed locally.

This raises an important question: what are the key considerations when companies choose between cloud-based and local AI models, and how do they decide which approach will better meet their needs?

Prioritizing and scaling AI benefits

According to a recent Deloitte report, organizations prioritize improved efficiency, productivity, and cost reduction as the primary benefits of generative AI, with 42% of respondents citing these as their most significant gains. However, a larger proportion, 58%, also recognized a broader spectrum of benefits, such as fostering innovation, enhancing products and services, and strengthening customer relationships.

To maximize the value of generative AI initiatives, companies believe it is critical to embed these technologies deeply into core business processes. Strong early returns have prompted two-thirds of organizations to increase their investments in generative AI, yet many still struggle to scale: nearly 70% of respondents report that less than a third of their AI experiments have been successfully transitioned into production.

How organizations adopt generative AI

A recent McKinsey survey grouped organizations deploying generative AI tools into three main categories: takers, who rely on off-the-shelf, publicly available solutions; shapers, who customize these tools with their proprietary data and systems; and makers, who develop their own foundation models from scratch.

While off-the-shelf models meet the needs of many industries, there is a growing trend toward customization and proprietary development, particularly in sectors like:

- Energy—60% of organizations reported significant customization or developed their own model

- Technology—56% reported significant customization or developed their own model

- Telecommunications—54% reported significant customization or developed their own model

About half (53%) of the generative AI applications reported by respondents use off-the-shelf solutions with minimal customization, but a significant portion focuses on tailoring or building models to address specific business challenges.

However, a survey by Gartner revealed that embedding generative AI within existing applications, such as Microsoft Copilot for Microsoft 365 or Adobe Firefly, is the most common method for leveraging GenAI, with 34% of respondents identifying it as their primary approach. This method is more prevalent than other strategies, including customizing GenAI models through prompt engineering (25%), training or fine-tuning bespoke models (21%), or using standalone tools like ChatGPT or Gemini (19%).

Alexander Sukharevsky, senior partner and global co-leader of QuantumBlack, AI by McKinsey, highlights that GenAI adoption is on the rise, but many organizations remain in the experimentation phase, often relying on simple, off-the-shelf solutions. While common in the early stages of new technology, this approach may fall short as AI becomes more prevalent. To stay competitive, organizations must focus on customization and building unique value. The future will require a blend of proprietary, off-the-shelf, and open-source models, coupled with the right skills, operating models, and data to move from experimentation to scalable impact.

Understanding the trade-offs

The difference between cloud-based and local (on-premises) AI models lies in their deployment strategy, scalability, performance, cost, control over data, reliability, and customization.

| | Cloud-based AI | Local AI |
|---|---|---|
| Deployment | | |
| Scalability | | |
| Performance | | |
| Costs | | |
| Control over data | | |
| Reliability | | |
| Customization | | |

Introducing Llama 3.1: A breakthrough in local AI models

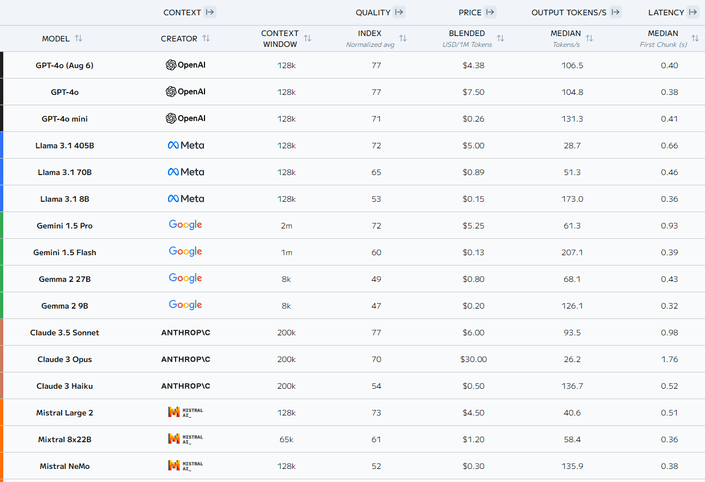

Llama 3.1, the latest iteration of Meta's AI language model, represents a significant step forward in local AI capabilities. Though it is an open-source model, Llama 3.1 surpasses many advanced paid models in quality, and it can be run locally on personal or enterprise-level hardware.

Source: https://artificialanalysis.ai/

Llama 3.1’s importance in the context of local AI lies in its adaptability: it can be combined with knowledge retrieval and categorization, and it can be fine-tuned on new data. Together, these techniques let a local deployment adapt to changing environments and improve its performance over time. At the same time, Meta emphasizes the importance of data security for companies that use the model and includes components such as Llama Guard and Prompt Guard in the Llama stack.
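To make "running locally" more concrete, here is a minimal sketch of sending a prompt to a Llama 3.1 instance on the same machine, so the prompt never leaves the local network. It assumes the model is served by Ollama on its default port with the `llama3.1` model tag; the article does not say which runtime was used, so treat the runtime, URL, and the helper names `build_request` and `ask_local_model` as illustrative assumptions.

```python
import json
import urllib.request

# Assumption: a local Ollama server on its default port. Any other local
# runtime would need a different URL and payload shape.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3.1"):
    """Build the HTTP request for a single, non-streaming completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt, model="llama3.1", timeout=120):
    """Send the prompt to the local server and return the generated text."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read())
    # With streaming disabled, the generated text is in the "response" field.
    return body["response"]
```

With a server running and the model already downloaded, `ask_local_model("Summarize the trade-offs of local AI deployment.")` would return the model's answer as a string. Because the endpoint is `localhost`, sensitive inputs stay on the organization's own hardware.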

Another impressive aspect of Llama 3.1 is how it integrates with various platforms and tools. For example, organizations can use Llama 3.1 with Microsoft tools by deploying the model on their Azure Stack Edge devices or integrating it into their existing Microsoft Azure Machine Learning pipelines. This allows them to leverage Microsoft’s robust AI infrastructure while maintaining the benefits of local deployment. Using Microsoft's development tools, developers can fine-tune and customize Llama 3.1 to align with specific business requirements, ensuring the AI model is both powerful and highly adaptable to their needs.

By combining Llama 3.1 with Microsoft's AI ecosystem, companies can achieve a seamless blend of local control and enterprise-grade capabilities, driving innovation while maintaining the highest standards for data governance.

Balancing control and flexibility

When choosing between local and cloud-based AI models, organizations must consider several factors, including deployment flexibility, scalability, performance, cost, data control, and customization. Each approach has its advantages: Cloud-based AI models provide scalability, lower upfront costs, and managed infrastructure, while local AI models offer greater control over data and the ability to customize solutions to meet specific business needs.

The decision ultimately depends on the organization's priorities, such as data security, cost management, and the ability to tailor AI capabilities. As generative AI technologies continue to evolve, companies may find that a hybrid approach—combining local and cloud-based solutions—offers the optimal balance between control, flexibility, and scalability to achieve their strategic objectives.
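One way to structure this decision is a simple weighted scorecard over the factors listed above. The sketch below is only an illustration: the 1-5 ratings for each option, the example weights, and the `hybrid_margin` threshold are hypothetical values chosen for demonstration, not figures from any survey or benchmark, and an organization would substitute its own.

```python
# Factors named in the article.
FACTORS = ("deployment", "scalability", "performance",
           "cost", "data_control", "customization")

# Hypothetical 1-5 ratings per option (higher is better); assumptions only.
OPTION_RATINGS = {
    "cloud": {"deployment": 5, "scalability": 5, "performance": 3,
              "cost": 4, "data_control": 2, "customization": 3},
    "local": {"deployment": 2, "scalability": 2, "performance": 4,
              "cost": 2, "data_control": 5, "customization": 5},
}


def weighted_score(ratings, weights):
    """Weighted average of the ratings, using the organization's priorities."""
    total_weight = sum(weights[f] for f in FACTORS)
    return sum(ratings[f] * weights[f] for f in FACTORS) / total_weight


def recommend(weights, hybrid_margin=0.5):
    """Return (recommendation, scores); suggest hybrid when scores are close."""
    scores = {name: weighted_score(ratings, weights)
              for name, ratings in OPTION_RATINGS.items()}
    if abs(scores["cloud"] - scores["local"]) < hybrid_margin:
        return "hybrid", scores
    return max(scores, key=scores.get), scores


# Example priorities: a security-focused manufacturer vs. a fast-scaling team.
security_first = {"deployment": 1, "scalability": 1, "performance": 1,
                  "cost": 1, "data_control": 5, "customization": 4}
scale_first = {"deployment": 3, "scalability": 5, "performance": 1,
               "cost": 5, "data_control": 1, "customization": 1}
balanced = {factor: 1 for factor in FACTORS}

for label, weights in [("security_first", security_first),
                       ("scale_first", scale_first),
                       ("balanced", balanced)]:
    choice, scores = recommend(weights)
    print(label, choice, {k: round(v, 2) for k, v in scores.items()})
```

Under these assumed ratings, heavy weight on data control and customization favors local deployment, heavy weight on scalability and cost favors the cloud, and evenly balanced priorities land within the margin where a hybrid setup is worth considering.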