Recent years have shown that the headless approach is becoming increasingly popular and evolving rapidly. So are the technologies we can use to build and run the front-end part of a solution, also called the “rendering host”. In this article, I focus on running a Node.js-based rendering host in Azure Kubernetes Service (AKS), specifically in Windows-based containers.

When working with containerized solutions locally in Docker, we use a simplified way to run our Next.js application: the code stored on our local machine is mirrored into the container. So during the build, we don't copy the project code into the image, and the application is not compiled to run independently. This approach only works locally, where the code is stored and run on the same machine.

This post assumes basic Docker knowledge of how to build and compose an application.

The docker-compose.yml and Dockerfiles for this setup look like this:

```yaml
version: "2.4"
services:
  # A Windows-based nodejs base image
  nodejs:
    image: ${REGISTRY}${COMPOSE_PROJECT_NAME}-nodejs:${VERSION:-latest}
    build:
      context: ./docker/build/nodejs
      args:
        PARENT_IMAGE: mcr.microsoft.com/windows/servercore:1809
        NODEJS_VERSION: ${NODEJS_VERSION}
    scale: 0
  rendering:
    image: ${REGISTRY}${COMPOSE_PROJECT_NAME}-rendering:${VERSION:-latest}
    build:
      context: ./docker/build/rendering
      target: ${BUILD_CONFIGURATION}
      args:
        PARENT_IMAGE: ${REGISTRY}${COMPOSE_PROJECT_NAME}-nodejs:${VERSION:-latest}
    volumes:
      - .\src\Project\DemoProject\nextjs:C:\app
    environment:
      SITECORE_API_HOST: "http://cd"
      NEXTJS_DIST_DIR: ".next-container"
      PUBLIC_URL: "https://${RENDERING_HOST}"
      JSS_EDITING_SECRET: ${JSS_EDITING_SECRET}
    depends_on:
      - cm
      - nodejs
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.rendering-secure.entrypoints=websecure"
      - "traefik.http.routers.rendering-secure.rule=Host(`${RENDERING_HOST}`)"
      - "traefik.http.routers.rendering-secure.tls=true"
```
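Both image names in this file are assembled with Compose variable interpolation. The TypeScript sketch below mimics the two forms used here, `${VAR}` and `${VAR:-default}`, so you can see which tag ends up in your registry. It is a hypothetical helper, not Docker Compose's own code. The registry and project values are made-up examples.

```typescript
// Hypothetical helper that mimics the two Docker Compose interpolation forms
// used in the image names above (not Compose's own implementation):
//   ${VAR}          -> value of VAR, or "" when VAR is unset
//   ${VAR:-default} -> value of VAR, or "default" when VAR is unset or empty
type Env = Record<string, string | undefined>;

function interpolate(template: string, env: Env): string {
  const pattern = /\$\{([A-Za-z_][A-Za-z0-9_]*)(?::-([^}]*))?\}/g;
  return template.replace(
    pattern,
    (_match: string, key: string, fallback: string | undefined): string => {
      const value = env[key];
      if (fallback !== undefined) {
        // ":-" uses the default for both unset and empty variables.
        return value === undefined || value === "" ? fallback : value;
      }
      return value === undefined ? "" : value;
    },
  );
}

const imageTemplate =
  "${REGISTRY}${COMPOSE_PROJECT_NAME}-rendering:${VERSION:-latest}";

// Example values (assumptions). REGISTRY is concatenated directly with the
// project name, so it has to carry its own trailing slash.
const env: Env = {
  REGISTRY: "myregistry.azurecr.io/",
  COMPOSE_PROJECT_NAME: "demoproject",
};

console.log(interpolate(imageTemplate, env));
// prints "myregistry.azurecr.io/demoproject-rendering:latest"
console.log(interpolate(imageTemplate, { ...env, VERSION: "1.0.42" }));
// prints "myregistry.azurecr.io/demoproject-rendering:1.0.42"
```

The sketch makes one detail visible: because the template glues `${REGISTRY}` and `${COMPOSE_PROJECT_NAME}` together, a missing trailing slash in `REGISTRY` silently produces a wrong image name.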

The docker-compose file defines two services that are required to run the rendering host in Docker:

- nodejs: a simple Windows base image in which we install Node.js to run our Next.js app. It is only built, never started (`scale: 0`), and serves as the parent image of `rendering`.

- rendering: I call it a “pseudo-container” because its image doesn't include any code; it uses a volume to mirror the code from the host machine into the container.
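The `rendering` service receives its settings only through environment variables. A small startup check like the one below can make a misconfigured container fail fast with a clear message. It is hypothetical and not part of Sitecore JSS. In a real Node.js app you would pass `process.env`. Here a plain object keeps the sketch self-contained. The `PUBLIC_URL` and secret values are assumed examples.

```typescript
// Hypothetical startup check (an assumption, not part of Sitecore JSS):
// fail fast if the rendering container lacks the variables set in compose.
type Env = Record<string, string | undefined>;

interface RenderingConfig {
  sitecoreApiHost: string;
  distDir: string;
  publicUrl: string;
  editingSecret: string;
}

const REQUIRED_VARS = [
  "SITECORE_API_HOST",
  "NEXTJS_DIST_DIR",
  "PUBLIC_URL",
  "JSS_EDITING_SECRET",
];

// Deliberately simple check for an absolute http(s) URL.
const ABSOLUTE_HTTP_URL = /^https?:\/\/[^\s/]+/;

function loadRenderingConfig(env: Env): RenderingConfig {
  // Unset and empty variables are both treated as missing.
  const missing = REQUIRED_VARS.filter((key) => !env[key]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  for (const key of ["SITECORE_API_HOST", "PUBLIC_URL"]) {
    if (!ABSOLUTE_HTTP_URL.test(env[key] as string)) {
      throw new Error(`${key} must be an absolute http(s) URL`);
    }
  }
  return {
    sitecoreApiHost: env["SITECORE_API_HOST"] as string,
    distDir: env["NEXTJS_DIST_DIR"] as string,
    publicUrl: env["PUBLIC_URL"] as string,
    editingSecret: env["JSS_EDITING_SECRET"] as string,
  };
}

// Values mirroring the compose file; the host name and secret are examples.
const localConfig = loadRenderingConfig({
  SITECORE_API_HOST: "http://cd",
  NEXTJS_DIST_DIR: ".next-container",
  PUBLIC_URL: "https://rendering.demo.localhost",
  JSS_EDITING_SECRET: "example-secret",
});
console.log(localConfig.distDir); // prints ".next-container"
```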

The Dockerfile for `nodejs` is stored in the ./docker/build/nodejs folder (as defined in docker-compose) and looks like this:

```dockerfile
# escape=`
```

The rendering Dockerfile is much simpler:

```dockerfile
# escape=`
```

We can see that the rendering Dockerfile doesn't perform any build; it only runs “npm run start:connected” when the container starts. The resulting image can't be run in Kubernetes, because it contains no application code: the code only arrives through the volume mount from the local machine. Kubernetes runs ready-made images and doesn't perform builds, which means we need to prepare all required images in CI/CD processes and push them to a container registry. We still need a docker-compose file for that. I usually create a separate one for AKS, called something like docker-compose.aks.yml, because it is quite specific to AKS:

```yaml
version: "2.4"
```

The file above still defines two services. `nodejs` is exactly the same as in the local setup and uses the same Dockerfile mentioned at the beginning of the article. The rendering Dockerfile, however, is now a multi-stage build of the Next.js app. We install the npm packages, copy node_modules into the build stage, and run the Next.js build. Finally, we copy the Next.js artifacts into the runtime image:

```dockerfile
ARG PARENT_IMAGE

# Stage 1: install npm packages
FROM ${PARENT_IMAGE} as dependencies
WORKDIR /app
COPY package.json ./
RUN npm install

# Stage 2: build the Next.js app with the installed node_modules
FROM ${PARENT_IMAGE} as builder
WORKDIR /app
COPY . .
COPY --from=dependencies /app/node_modules ./node_modules
ENV NEXT_TELEMETRY_DISABLED 1
RUN npm run build

# Stage 3: runtime image that only receives the build artifacts
FROM ${PARENT_IMAGE} as runner
WORKDIR /app
ENV NODE_ENV production
ENV NEXT_TELEMETRY_DISABLED 1
USER ContainerAdministrator
SHELL ["powershell", "-Command", "$ErrorActionPreference = 'Stop'; $ProgressPreference = 'SilentlyContinue';"]

COPY --from=builder /app/next.config.js ./next.config.js
COPY --from=builder /app/tsconfig.scripts.json ./tsconfig.scripts.json
COPY --from=builder /app/tsconfig.json ./tsconfig.json
COPY --from=builder /app/public ./public
COPY --from=builder /app/.next ./.next
COPY --from=builder /app/node_modules ./node_modules
COPY --from=builder /app/scripts ./scripts
COPY --from=builder /app/src ./src
COPY --from=builder /app/package.json ./package.json
COPY --from=builder /app/.graphql-let.yml ./.graphql-let.yml

RUN Remove-Item -Path C:\app\.next\static -Force -Recurse;

EXPOSE 80
EXPOSE 443

ENTRYPOINT "npm run start:production"
```
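To make the stage chain explicit, here is a small TypeScript sketch. It reads `FROM … as …` and `COPY --from=` lines and lists the stages the final `runner` stage depends on. It is a hypothetical helper and a simplification: it ignores line continuations and flags such as `--platform`. It shows that `runner` only pulls files from `builder`, while `dependencies` is used only through `builder`.

```typescript
// Hypothetical helper: maps each stage of a multi-stage Dockerfile to the
// earlier stages it uses via "FROM <stage>" or "COPY --from=<stage>".
type StageGraph = Record<string, string[]>;

function hasStage(graph: StageGraph, stage: string): boolean {
  return Object.prototype.hasOwnProperty.call(graph, stage);
}

function stageDependencies(dockerfile: string): StageGraph {
  const graph: StageGraph = {};
  let current = "";
  let index = 0;
  for (const raw of dockerfile.split("\n")) {
    const line = raw.trim();
    const from = /^FROM\s+(\S+)(?:\s+AS\s+(\S+))?/i.exec(line);
    if (from) {
      // Unnamed stages can be referenced by their index, as in Docker.
      current = from[2] !== undefined ? from[2] : String(index);
      index++;
      graph[current] = hasStage(graph, from[1]) ? [from[1]] : [];
      continue;
    }
    const copy = /^COPY\s+.*--from=(\S+)/i.exec(line);
    // --from values that are not earlier stages are external images: skip them.
    if (copy && current !== "" && hasStage(graph, copy[1]) &&
        graph[current].indexOf(copy[1]) === -1) {
      graph[current].push(copy[1]);
    }
  }
  return graph;
}

// Stages needed for `target`, dependencies first. Edges only point to
// earlier stages, so the graph cannot contain cycles.
function requiredStages(graph: StageGraph, target: string): string[] {
  const order: string[] = [];
  const visit = (stage: string): void => {
    if (!hasStage(graph, stage) || order.indexOf(stage) !== -1) return;
    for (const dep of graph[stage]) visit(dep);
    order.push(stage);
  };
  visit(target);
  return order;
}

// Abbreviated version of the rendering Dockerfile above.
const renderingDockerfile = [
  "ARG PARENT_IMAGE",
  "FROM ${PARENT_IMAGE} as dependencies",
  "COPY package.json ./",
  "RUN npm install",
  "FROM ${PARENT_IMAGE} as builder",
  "COPY . .",
  "COPY --from=dependencies /app/node_modules ./node_modules",
  "RUN npm run build",
  "FROM ${PARENT_IMAGE} as runner",
  "COPY --from=builder /app/.next ./.next",
  "COPY --from=builder /app/node_modules ./node_modules",
].join("\n");

const graph = stageDependencies(renderingDockerfile);
console.log(requiredStages(graph, "runner").join(" -> "));
// prints "dependencies -> builder -> runner"
```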

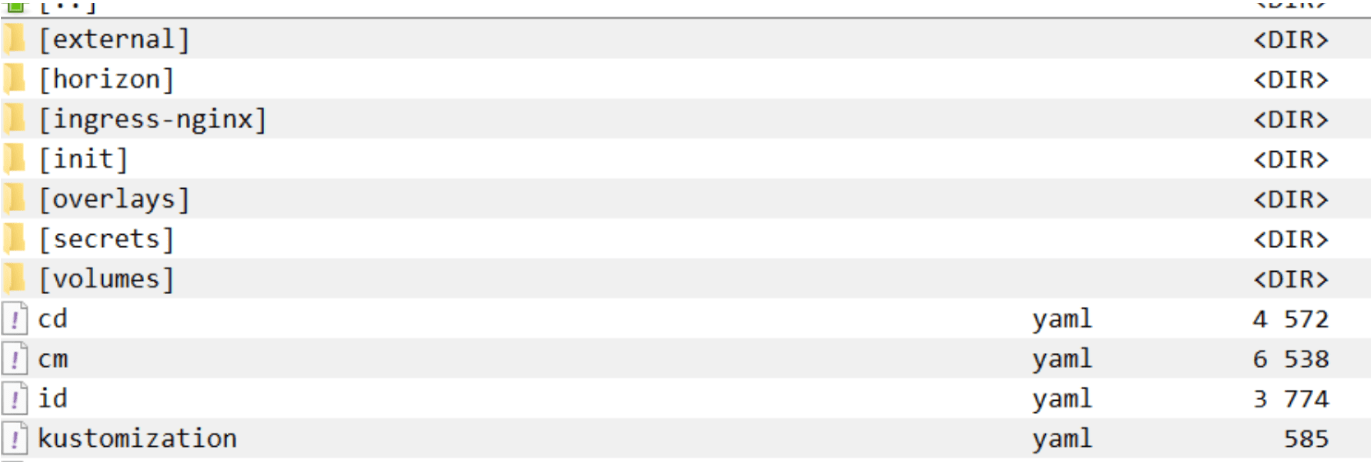

Now we have images ready to run in Azure Kubernetes Service. In this article, I don't describe how to run the whole of Sitecore in Kubernetes. That is well described in the Sitecore documentation, which you can download from the Sitecore website. The rest of the article only explains how to add the rendering host to the default k8s specification. If you set up your cluster and deployed Sitecore following that documentation, your k8s specification looks like below:

The first thing we have to do is add a new YAML file called “rendering” with the following configuration:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: rendering
spec:
  selector:
    app: rendering
  ports:
    - protocol: TCP
      port: 3000
      targetPort: 3000
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rendering
  labels:
    app: rendering
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rendering
  template:
    metadata:
      labels:
        app: rendering
    spec:
      # The image is based on windows/servercore, so it must run on Windows nodes
      nodeSelector:
        kubernetes.io/os: windows
      containers:
        # Placeholders are quoted so the file stays valid YAML before replacement
        - name: "[your project name]-rendering"
          image: "#{CONTAINER-REGISTRY}##{COMPOSE_PROJECT_NAME}#-rendering"
          ports:
            - containerPort: 3000
          imagePullPolicy: Always
          env:
            - name: DEBUG
              value: "sitecore-jss:*"
            - name: SITECORE_API_HOST
              value: http://cd
            - name: NEXTJS_DIST_DIR
              value: .next
            - name: PUBLIC_URL
              value: https://{YOUR-RENDERING-HOST-PUBLIC-URL}
            - name: JSS_EDITING_SECRET
              value: "{YOUR-JSS-EDITING-SECRET}"
            - name: SITECORE_API_KEY
              valueFrom:
                secretKeyRef:
                  name: "[your project name]-global"
                  key: "[your project name]-global-api-key.txt"
            - name: JSS_APP_NAME
              valueFrom:
                secretKeyRef:
                  name: "[your project name]-global"
                  key: "[your project name]-global-jss-app-name.txt"
      imagePullSecrets:
        - name: regcred
```

The configuration above defines two things. The first is a Kubernetes Service, which gives other parts of the cluster (for example, the ingress controller) a stable name and port for reaching the rendering host. The second is the rendering Deployment, which creates and maintains the pod that runs our rendering host. Note that the configuration contains placeholder values, such as `[your project name]`, `{YOUR-JSS-EDITING-SECRET}` and `#{CONTAINER-REGISTRY}#`. You need to replace some or all of them with your own values. I replace them during the CI/CD process.
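The author's pipeline isn't shown, so the following is only an assumption about how such a replacement step can work. The TypeScript sketch substitutes `#{NAME}#` tokens in the manifest text and fails if any token has no value, so an unreplaced placeholder never reaches the cluster. The function name and the registry and project values are hypothetical.

```typescript
// Hypothetical CI/CD step (an assumption; the author's pipeline is not shown):
// replace #{NAME}# tokens in a manifest and fail on any token without a value.
type Vars = Record<string, string>;

function replaceTokens(manifest: string, vars: Vars): string {
  const missing: string[] = [];
  const result = manifest.replace(
    /#\{([A-Za-z0-9_.-]+)\}#/g,
    (token: string, key: string): string => {
      if (Object.prototype.hasOwnProperty.call(vars, key)) {
        return vars[key];
      }
      missing.push(key);
      return token; // keep the token; we throw below anyway
    },
  );
  if (missing.length > 0) {
    throw new Error(`No value for token(s): ${missing.join(", ")}`);
  }
  return result;
}

// Two tokens written back to back, as in the image line of the manifest.
const imageLine =
  "image: #{CONTAINER-REGISTRY}##{COMPOSE_PROJECT_NAME}#-rendering";
console.log(
  replaceTokens(imageLine, {
    "CONTAINER-REGISTRY": "myregistry.azurecr.io/", // example value
    COMPOSE_PROJECT_NAME: "demoproject", // example value
  }),
);
// prints "image: myregistry.azurecr.io/demoproject-rendering"
```

Failing on missing values is a deliberate choice. A silently left token in `image:` would only surface later as an image pull error in the pod.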

The last thing left is to add one more rule to the ingress served by our NGINX ingress controller, so that traffic for your host name is forwarded to the rendering host:

```yaml
- host: your-host-name.com
  http:
    paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: rendering
            port:
              number: 3000
```
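The rule uses `path: /` with `pathType: Prefix`, so every request for that host goes to the `rendering` service on port 3000. The TypeScript sketch below illustrates why, following Prefix matching as the Kubernetes Ingress documentation describes it. Paths are split on `/` and compared element by element, and trailing slashes are ignored. It is an illustration, not the controller's code. When several paths match, Kubernetes gives precedence to the longest one, so more specific rules added later still win over `/`.

```typescript
// Sketch of the Kubernetes Ingress "Prefix" path rule: paths are split on "/"
// and compared element by element (case-sensitive); trailing slashes are
// ignored. Written from the documented semantics, not taken from NGINX.
function pathElements(path: string): string[] {
  return path.split("/").filter((part) => part !== "");
}

function prefixMatches(rulePath: string, requestPath: string): boolean {
  const rule = pathElements(rulePath);
  const request = pathElements(requestPath);
  if (rule.length > request.length) {
    return false;
  }
  // Every rule element must equal the request element at the same position.
  return rule.every((part, i) => part === request[i]);
}

console.log(prefixMatches("/", "/products/shoes")); // true: "/" matches every path
console.log(prefixMatches("/aaa/bbb", "/aaa/bbb/ccc")); // true
console.log(prefixMatches("/aaa/bb", "/aaa/bbb")); // false: "bb" is only a substring
```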