From the factory floor to security monitoring, computer vision has become a business imperative. Explore some real-world use cases that show how AI drives smarter quality control, automates complex processes, and delivers measurable ROI across various business tasks.

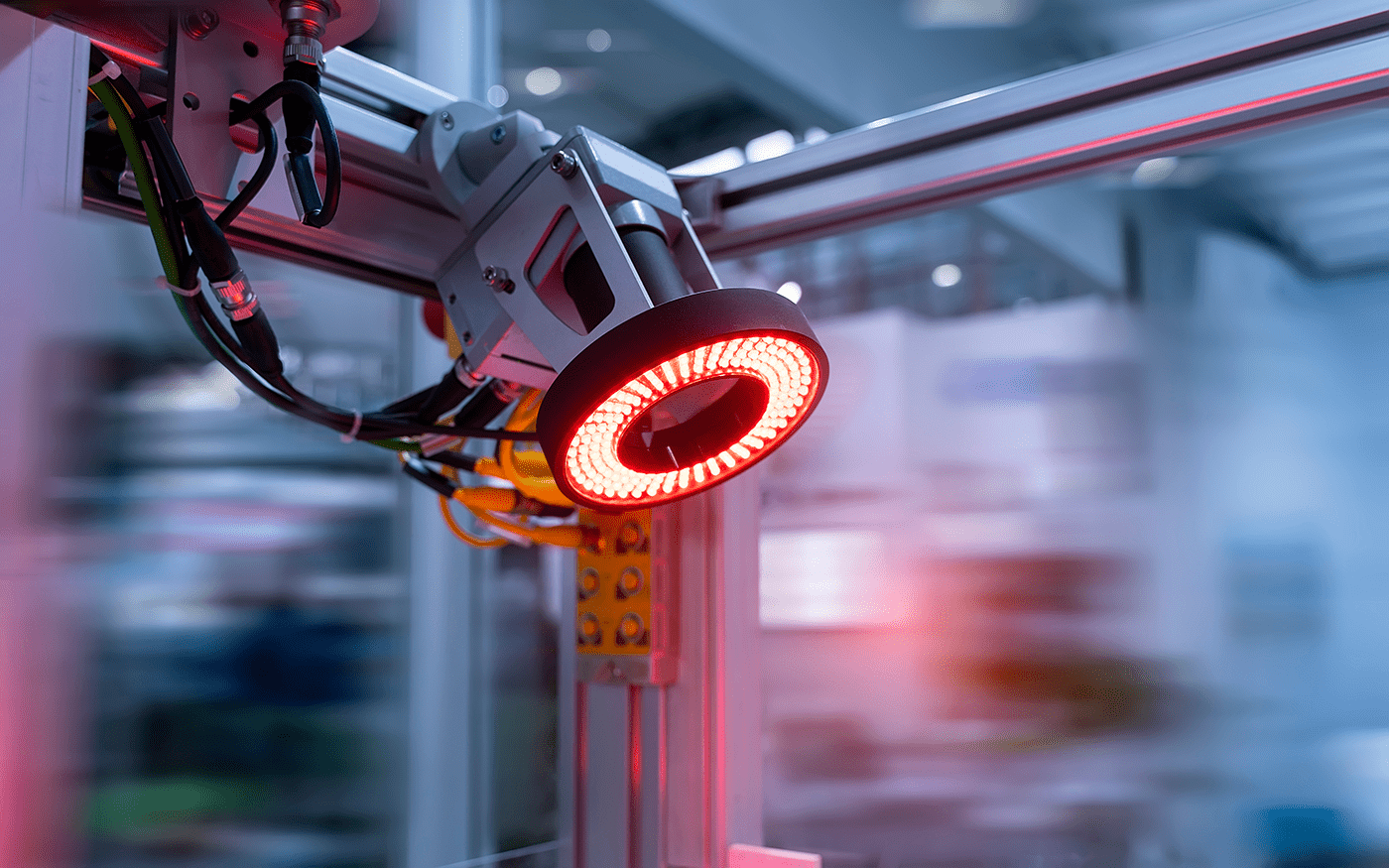

Computer vision (CV) lets manufacturers turn what they see into smarter decisions, allowing them to catch defects faster, streamline operations, and take action before problems start to grow.

AI-powered visual intelligence can predict maintenance needs, automate inspections, improve safety, and even enhance the customer experience. The payoff follows quickly in the form of lower costs, higher output, and a measurable impact on the bottom line.

CV in action: Real use cases

Want to see how computer vision delivers real impact? We've gathered examples from leading manufacturers using CV to automate inspections, detect defects in real time, and solve complex challenges with measurable results.

Defect detection and maintenance

Automated Rust Detection on Offshore Rigs with Drone-Based Computer Vision

Energy (Oil & Gas)

Goal: Automate rust detection on offshore rigs and pipelines to replace time-consuming and hazardous manual corrosion surveys

Problem: Surface corrosion inspections were slow, expensive, and dangerous, requiring engineers to travel to offshore facilities and manually review video footage, which took several months.

Solution: The company built a computer-vision platform that analyzes drone imagery to automatically detect and measure rust on offshore rigs.

- Images captured from drone video streams are cleaned, preprocessed, and fed into a machine learning model trained with weak supervision. This method reduces the need for manual labeling and improves detection accuracy over time.

- The platform links directly to digital twins of the inspected assets, giving engineers a visual, interactive view of rigs, vessels, and storage tanks.

- Teams can now plan inspections ahead of time, focus on high-risk areas, and streamline field operations, saving them time and effort and ensuring that corrosion inspections are as effective as possible.

Data:

- Thousands of drone-captured images under varying lighting and weather conditions

- Pixel-level "fuzzy labels" generated through weak supervision to detect rust vs. non-rust

- 3D digital-twin geometry linked to detected rust areas for remediation planning

Results:

- 50% reduction in inspection effort, leading to significant cost savings

- Inspection cycle time cut by over 50%, from 3 months to 6 weeks, speeding up maintenance decisions

- Fewer personnel required on site, improving safety and reducing logistics expenses
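The source does not say how the fuzzy labels in this case were produced. As a rough illustration of the general weak-supervision idea, the sketch below combines a few hand-written heuristics into a soft per-pixel label instead of relying on manual annotation. The heuristics, thresholds, and function names are all hypothetical.

```python
from typing import Callable, Optional, Sequence, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Vote = Optional[int]           # 1 = rust, 0 = not rust, None = abstain


# Hypothetical labeling heuristics; thresholds are illustrative only.
def reddish(p: Pixel) -> Vote:
    r, g, b = p
    return 1 if r > 120 and r > g and r > 1.4 * b else 0


def very_bright(p: Pixel) -> Vote:
    # Sky and glare are bright in all channels: vote "not rust", else abstain.
    return 0 if min(p) > 200 else None


def brownish(p: Pixel) -> Vote:
    r, g, b = p
    return 1 if 60 <= r <= 160 and b < g < r else None


RULES: Sequence[Callable[[Pixel], Vote]] = (reddish, very_bright, brownish)


def fuzzy_label(p: Pixel) -> float:
    """Soft label in [0, 1]: the mean of all non-abstaining votes."""
    votes = [v for v in (rule(p) for rule in RULES) if v is not None]
    return sum(votes) / len(votes) if votes else 0.5


def rust_fraction(pixels: Sequence[Pixel]) -> float:
    """Average soft label over a region, as a rough rust-coverage score."""
    return sum(fuzzy_label(p) for p in pixels) / len(pixels)
```

In a real pipeline, soft labels like these would typically serve as noisy training targets for a segmentation model rather than as the final prediction.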

Hitachi: Automated Defect Detection on Automotive Assembly Lines with 5G and Computer Vision

Automotive Components Manufacturing

Goal: Improve defect detection and increase throughput on assembly lines with real-time computer vision

Problem: Manual inspection on assembly lines was slow and error-prone, which limited throughput and allowed defects to pass undetected. Installing high-resolution cameras for continuous monitoring was complex and time-consuming, requiring weeks of cabling and network configuration.

Solution: Hitachi Astemo implemented a private 5G network combined with edge-to-cloud computer vision for real-time defect detection.

- Machine learning models run on edge devices, processing high-resolution 4K video streams from the assembly lines with millisecond-level inference, while the cloud handles continuous model retraining.

- The system inspects multiple components simultaneously, enabling engineers to detect sub-millimeter defects instantly and plan corrective action before quality issues escalate.

Data:

- Continuous 4K video streams from 24 assembly components

- Historical labeled images of good vs. defective parts for model training

Results:

- 24x increase in inspection throughput: Now the system inspects 24 parts simultaneously vs. one-by-one manual checks

- Detects sub-millimeter defects in real time, allowing corrections to be made earlier and reducing quality-related costs

Smart Defect Detection on Steel Pipes Using Edge AI and Computer Vision

Recycling (Waste Management)

Goal: Improve defect detection in steel pipes to reduce scrap, warranty risks, and unplanned downtime

Problem: Manual inspection of steel pipes often missed small cracks and corrosion, especially on fast-moving lines, resulting in defective products, costly rework, and unplanned downtime. The company needed a faster, more accurate way to monitor quality.

Solution: The company implemented a computer-vision system that combines industrial-grade cameras, deep-learning models, and edge-to-cloud computing to automate inspection and logging.

- High-resolution cameras capture images of each pipe on the production line. The images are also stored in the system for further traceability, analysis, and quality improvement.

- A deep-learning model, trained in Amazon SageMaker, analyzes these images in real time and detects defects such as cracks, corrosion, and surface irregularities.

- The system then immediately logs the type, location, and severity of each defect and sends instant alerts so that upstream processes can be adjusted as needed.

Data:

- Thousands of labeled images of steel pipes with defects like cracks and corrosion

- Continuous image feed from the production line for real-time inference

- Metadata such as pipe ID, batch, and operator for traceability

Results:

- 17% increase in accuracy of defect detection over manual inspection

- 10% reduction in repair-related downtime by detecting defects earlier

- $150,000 annual savings per production line thanks to reduced scrap, rework, and inspection labor
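The logging step in this case (defect type, location, and severity, tied to pipe ID, batch, and operator) might be represented roughly as follows. This is a minimal sketch: the field names, the severity scale, and the `ALERT_SEVERITY` cut-off are assumptions made for illustration, not details from the source.

```python
import json
from dataclasses import asdict, dataclass
from typing import List

ALERT_SEVERITY = 0.7  # hypothetical cut-off for raising an alert


@dataclass(frozen=True)
class DefectRecord:
    pipe_id: str
    batch: str
    operator: str
    defect_type: str     # e.g. "crack" or "corrosion"
    position_mm: float   # location along the pipe
    severity: float      # model score in [0, 1]


def log_defect(record: DefectRecord, log: List[str]) -> bool:
    """Append the record as a JSON line and report whether it warrants an alert."""
    log.append(json.dumps(asdict(record)))
    return record.severity >= ALERT_SEVERITY


log: List[str] = []
crack = DefectRecord("P-0001", "B-17", "op-42", "crack", 1250.0, 0.91)
needs_alert = log_defect(crack, log)
```

Keeping the traceability metadata in the same record as the detection makes it straightforward to filter defects by batch or operator later on.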

Intel Automates Box Damage Inspection with AI-Powered Mobile App

High-Tech Manufacturing & Logistics

Goal: Speed up damage inspections in logistics and reduce errors in identifying damaged cartons

Problem: Manual inspection of cartons was slow, inconsistent, and error-prone. Workers had to examine multiple criteria, and expert review was often delayed, creating space bottlenecks, higher costs, and slower claim processing.

Solution: Intel built a computer vision platform to inspect boxes and a mobile app workers can use to take photos, check for damage, and automatically send results to the right people.

- The platform collects and labels sample photos of damaged vs. acceptable boxes using Intel Geti™, a tool for managing image datasets and training computer vision models. Then, it trains a model to detect and classify damage.

- Warehouse workers take photos of suspect boxes with the mobile app, and then the model evaluates damage on-device, generating damage classification and metadata.

- The system then routes images, damage assessments, and alerts by email to quality engineers within seconds, accelerating claim processing and reducing errors.

- The platform stores all images and assessments in a centralized database and uses active learning to incorporate new images for continuous model improvement.

Data:

- Sample photos of damaged vs. acceptable cartons to train the model

- Continuous image capture via mobile app to retrain and refine the model

- Box-condition metadata linked to enterprise systems to facilitate claim filing

Results:

- 90% reduction in inspection time; damage assessment now happens in seconds

- Faster claim processing and better space utilization thanks to fewer false damage flags
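The case mentions active learning without saying which selection strategy is used. One common option is uncertainty sampling: send the images the model is least sure about to a human for labeling. The sketch below assumes a binary damaged/acceptable classifier; the function name and the sample scores are hypothetical.

```python
from typing import Dict, List


def select_for_labeling(damage_prob: Dict[str, float], budget: int) -> List[str]:
    """Return the `budget` image IDs whose predictions are closest to 0.5."""
    ranked = sorted(damage_prob, key=lambda image_id: abs(damage_prob[image_id] - 0.5))
    return ranked[:budget]


# Hypothetical model outputs: image ID -> predicted probability of damage.
predictions = {"box_a": 0.98, "box_b": 0.52, "box_c": 0.10, "box_d": 0.40}
to_review = select_for_labeling(predictions, budget=2)
```

Here `box_b` and `box_d` would be queued for review, since the model is confident about the other two.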

Reducing False Positives by 20%: Computer Vision Empowers Electronics QA

Electronics Manufacturing

Goal: Improve defect detection efficiency and reduce the number of false positives in electronics assembly without slowing throughput

Problem: Manual visual inspection of tiny electronic components and dense circuit boards was slow, inconsistent, and error-prone. Traditional optical inspection machines often triggered false positives, which slowed production further.

Solution: IBM deployed a deep-learning computer-vision toolkit alongside existing rule-based automated inspections to detect defects in real time across global manufacturing sites.

- When a defect appears on the production line, operators get alerts, and cameras automatically capture images of it. The platform then analyzes the defect in real time with deep-learning and rule-based models so engineers can address the issue immediately.

- Line technicians can also use built-in labeling and model retraining tools to quickly label new defects or products and update the models, keeping the system up to date.

- The platform stores all images, analysis results, and model outputs centrally, providing a complete record for historical tracking, continuous improvement, and performance monitoring.

Data:

- Labeled image sets of each product family showing non-defective parts and known defects

- Continuous image streams from cameras at inspection stations for real-time inference

- Operator feedback metadata to improve model accuracy

Results:

- 5x improvement in inspection efficiency

- 20% reduction in false positives

- Faster defect detection, leading to improved throughput and reduced inspection costs
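The source does not explain how the deep-learning and rule-based checks are combined. One plausible arrangement, shown here purely as an assumption, is to let the deep-learning model confirm or reject each rule-based flag, which is how a second opinion can cut false positives. All data below is made up for illustration.

```python
from typing import List, Sequence


def combined_flags(rule_flags: Sequence[bool], dl_scores: Sequence[float],
                   threshold: float = 0.5) -> List[bool]:
    """Keep a rule-based flag only if the deep-learning score also supports it."""
    return [flag and score >= threshold for flag, score in zip(rule_flags, dl_scores)]


def false_positives(flags: Sequence[bool], is_defect: Sequence[bool]) -> int:
    """Count parts that were flagged although they are actually fine."""
    return sum(1 for flag, truth in zip(flags, is_defect) if flag and not truth)


# Toy data for five boards; values are made up for illustration.
rule_flags = [True, True, False, True, True]
dl_scores = [0.92, 0.15, 0.05, 0.81, 0.30]
is_defect = [True, False, False, True, False]

final = combined_flags(rule_flags, dl_scores)
```

The trade-off in this arrangement is that a defect the rules never flag is also never seen by the second check, so it reduces false positives but does nothing for missed defects.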

Computer Vision Transforming Quality Control in Furniture Production

Wood Furniture Manufacturing

Goal: Achieve near-perfect defect detection at line speed in wood panel production to eliminate defects before final assembly

Problem: Manual inspection of wood panels was subjective and could not reliably catch defects such as cracks, knots, and glue gaps at line speed, so flawed panels could reach final assembly.

Solution: The company developed a custom computer-vision system to detect wood panel defects immediately after the gluing step.

- High-resolution cameras and custom lighting capture every panel under production conditions.

- Deep-learning models are trained on labeled images of good vs. defective panels, including cracks, knots, and glue gaps.

- Real-time integration with PLCs automatically diverts defective panels, logs defect type and location, and associates each defect with its production batch for root-cause analysis.

- Cloud connectivity and dashboard monitoring make it possible to remotely configure, analyze, and improve models without interrupting production.

- The operator feedback loop allows new defect types to be labeled and enables quick model retraining to meet evolving production needs.

Data:

- Thousands of labeled images of hardwood panels showing typical defects (e.g., cracks, knots, glue gaps)

- A continuous stream of images of panels in production for real-time inference

- Production metadata linked to each image to provide downstream analytics

Results:

- 90% improvement in defect detection versus manual inspection

- Zero defective panels pass to final assembly, resulting in a 0% customer return rate

- 100% automatic inspection at line speed, eliminating manual QC labor and associated subjectivity
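Associating each defect with its production batch is what makes root-cause analysis possible. As a minimal sketch of that idea, the snippet below counts detections per batch to surface the batches worth investigating first. The tuple layout, batch IDs, and defect names are hypothetical.

```python
from collections import Counter
from typing import Iterable, List, Tuple

Detection = Tuple[str, str]  # (batch_id, defect_type)


def worst_batches(detections: Iterable[Detection], top_n: int = 1) -> List[Tuple[str, int]]:
    """Return the `top_n` batches with the most logged defects."""
    counts = Counter(batch for batch, _ in detections)
    return counts.most_common(top_n)


# Made-up detections for illustration.
detections = [("B1", "crack"), ("B2", "knot"), ("B1", "glue_gap"), ("B1", "crack")]
suspects = worst_batches(detections)
```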


Automation and robotics

Streamlined PET Bottle Sorting for Higher Throughput and Purity

Recycling (Waste Management)

Goal: Increase throughput and reduce labor costs by automating the sorting process in the PET bottle recycling operation

Problem: Manual sorting of PET bottles was slow, inconsistent, and physically demanding, limiting throughput and the purity of recycled material. Rising volumes (over two billion bottles annually) and staffing shortages made it increasingly difficult for Evergreen to meet production goals and maintain high-quality food-grade rPET standards.

Solution: The company deployed six AI-guided robotic sorters equipped with high-resolution cameras.

- These robots use computer vision and deep-learning models to continuously identify and separate clear PET bottles from mixed streams.

- The system learns from thousands of labeled images and real-time line-side video, while ongoing performance data refines sorting accuracy.

- The robots integrate seamlessly into the 24/7 production environment without requiring additional maintenance staff.

Data:

- Thousands of high-resolution images of clear, green, and opaque PET bottles for training

- Continuous video streams from line-side cameras for real-time inference

- Ongoing pick rate and contamination data to refine the model

Results:

- 200% increase in capture rate, recovering 3x as many clear PET bottles as manual sorting

- Picking speed increased to 120 bottles per minute, up from 30-40 by human sorters

- 90% reduction in contamination, delivering higher-purity recycled material for food-grade rPET
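Percentage increases and multipliers are easy to mix up, so here is the arithmetic behind the capture-rate and pick-rate figures above, using only numbers from this case. The helper name is just for illustration.

```python
def multiplier_from_percent_increase(percent: float) -> float:
    """A p% increase multiplies the baseline by (1 + p/100)."""
    return 1 + percent / 100


# A 200% increase means three times the baseline, not two.
capture_multiplier = multiplier_from_percent_increase(200)

# Pick-rate speed-up relative to the manual range of 30-40 bottles per minute.
robot_rate = 120
speedup_low = robot_rate / 40
speedup_high = robot_rate / 30
```

So each robot picks roughly three to four times as fast as a human sorter.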

Customer experience and claims processing

Insurer Cuts Claim Cycle to Minutes with AI Auto Damage Detection

Insurance

Goal: Automate vehicle damage claims processing and improve customer experience with a fully digital, touchless journey

Problem: Manual review of vehicle damage photos overloaded appraisers, leading to slow claim cycles, inconsistent estimates, and a poor customer experience. Customers had to send images by email or other channels, and appraisers had to analyze every claim by hand, which was time-consuming and error-prone.

Solution: The company deployed a deep-learning visual AI platform that analyzes vehicle photos to automatically detect damage, classify severity, and generate repair cost estimates.

- The platform integrates with the first notice of loss (FNOL) process: when a claim is submitted, it sends a web or SMS link so customers can capture and upload 5–10 photos of the damaged vehicle directly through the app.

- Deep-learning models trained on millions of vehicle-damage images then automatically detect damaged parts, classify the type of damage (scratches, dents, broken glass, etc.), and estimate severity.

- The system converts detected vehicle damage into repair cost estimates using a parts-pricing database. The platform processes a claim automatically when the AI's confidence exceeds a set threshold and routes lower-confidence claims to a human adjuster for review.

Data:

- Millions of annotated images of vehicle damage from global archives and historical claims

- Up to 10 new smartphone photos per claim for real-time inference

- A parts-pricing database to convert damage into cost estimates

Results:

- 90% of claims processed without human appraisers

- 98% of qualifying claims completed in less than 15 minutes, compared to days in the past

- 96% accuracy of AI-generated estimates compared to expert adjusters

- 3x increase in ROI with 70–75% customer uptake of the AI web app
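The estimate-and-route logic in this case can be sketched in a few lines. This is an illustration only: the parts prices, severity multipliers, and the 0.9 threshold are hypothetical, and the rule that every detection must clear the threshold is an assumption about how claim-level confidence might be derived.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical parts prices and severity multipliers, for illustration only.
PARTS_PRICES: Dict[str, float] = {"front_bumper": 450.0, "windshield": 300.0}
SEVERITY_FACTOR: Dict[str, float] = {"minor": 0.25, "moderate": 0.5, "severe": 1.0}


@dataclass(frozen=True)
class Damage:
    part: str
    severity: str
    confidence: float  # model confidence in [0, 1]


def estimate_cost(damages: List[Damage]) -> float:
    """Sum part price times severity factor over all detected damages."""
    return sum(PARTS_PRICES[d.part] * SEVERITY_FACTOR[d.severity] for d in damages)


def route_claim(damages: List[Damage], threshold: float = 0.9) -> str:
    """Auto-process only if every detection meets the confidence threshold."""
    if damages and all(d.confidence >= threshold for d in damages):
        return "auto"
    return "adjuster"


claim = [Damage("front_bumper", "moderate", 0.95), Damage("windshield", "severe", 0.97)]
```

With these made-up values, `claim` is estimated at 525.0 and routed to automatic processing; adding a single low-confidence detection sends it to an adjuster instead.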

Security and surveillance

Scaling Video Surveillance 2× Faster with Computer Vision and Cloud Computing

Security and Surveillance

Goal: Use computer vision to scale real-time video surveillance and threat detection across thousands of video streams

Problem: Legacy cloud infrastructure could not scale effectively to handle the massive amounts of data generated by real-time video streams. Filtering false alarms for thousands of customers was cost-prohibitive, and the infrastructure was too slow to support rapid expansion.

Solution: The company migrated its video analytics stack to Google Cloud, using Kubernetes Engine and NVIDIA Triton inference servers to process up to 5,000 video streams simultaneously.

- The video-processing platform uses deep-learning models to detect objects, track activity, and generate alerts in real time across thousands of camera streams.

- Kubeflow and CI/CD pipelines automate model training and updates, continuously refining models using historical labeled video clips and live streaming metadata.

- Videos, metadata, and alerts are stored in cloud storage and distributed via Cloud Load Balancing, with serverless event processing to provide real-time notifications and scalable operations.

- GPU allocation and container orchestration keep the models running efficiently with minimal latency while holding processing costs down.

Data:

- Live security video from up to 5,000 camera streams for real-time inference

- Historical labeled video clips for training deep-learning models

- Metadata (e.g., object tracks, alert types) for further model refinement

Results:

- Up to 5,000 video streams can be processed at once with minimal latency

- 60% reduction in GPU processing costs compared to the previous cloud provider

- 2x faster development and deployment cycles with automated CI/CD on GKE
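The case names false-alarm filtering as a pain point but does not describe how alerts are filtered. A simple, widely used technique, shown here as an assumption rather than the company's actual method, is to require an object to be detected in several consecutive frames before raising an alert. The function name and the frame data are hypothetical.

```python
from typing import List, Sequence


def debounced_alerts(detections: Sequence[bool], min_consecutive: int) -> List[int]:
    """Return frame indices where a run of detections first reaches `min_consecutive`."""
    alerts: List[int] = []
    run = 0
    for index, seen in enumerate(detections):
        run = run + 1 if seen else 0
        if run == min_consecutive:
            alerts.append(index)
    return alerts


# Made-up per-frame detections for one camera stream.
frames = [True, False, True, True, True, True, False, True, True, True]
alert_frames = debounced_alerts(frames, min_consecutive=3)
```

The single-frame blip at index 0 is ignored, while the two sustained detections each trigger exactly one alert.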

Imagery analytics

EagleView: Fast, Cost-Efficient Computer Vision and AI for Aerial Imagery

Geospatial Imagery & Analytics

Goal: Improve image processing speed and reduce costs for large-scale geospatial imagery analysis

Problem: EagleView's legacy system couldn't keep up with processing 1 billion+ aerial images. Some large batches took up to 16 hours to process, causing the company to miss SLA deadlines and limiting scalability.

Solution: The company migrated its machine learning pipelines to Amazon SageMaker using NVIDIA Triton inference servers.

- The system automatically ingests and processes images using deep-learning models alongside existing proprietary algorithms. Asynchronous inference and managed scaling ensure endpoints can handle thousands of requests while automatically scaling to zero when idle, cutting idle GPU costs.

- Integration with SageMaker and AWS services enables near-real-time analysis, allowing the platform to extract property attributes, detect structures, and deliver insights within the strict 2-minute SLA.

- Historical labeled imagery supports continuous model refinement, while processed images, results, and metadata are stored centrally for monitoring, auditing, and further training.

- NVIDIA Triton inference servers accelerate processing, while SageMaker handles deployment, scaling, and monitoring. This setup helps the company process massive volumes of ultra-high-resolution imagery efficiently, cut costs, and maintain reliable performance at scale.

Data:

- Continuous ingestion of ultra-high-resolution aerial images

- Historical labeled imagery for training object-detection models

- Real-time image batches for time-sensitive use cases

Results:

- 90% reduction in processing time, from 16 hours to 1.5 hours per 1,000 square miles

- 40–50% reduction in GPU compute costs
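Scaling to zero when idle is where much of the GPU saving in a setup like this comes from. The sketch below is a simplified backlog-based scaling rule, not the actual SageMaker policy: it sizes the fleet from the number of queued requests and releases every instance when the queue is empty. The parameter names and values are hypothetical.

```python
import math


def desired_instances(backlog: int, target_per_instance: int, max_instances: int) -> int:
    """Size the fleet from the request backlog; zero instances when idle."""
    if backlog <= 0:
        return 0
    return min(max_instances, math.ceil(backlog / target_per_instance))


idle = desired_instances(backlog=0, target_per_instance=5, max_instances=10)
busy = desired_instances(backlog=12, target_per_instance=5, max_instances=10)
capped = desired_instances(backlog=1000, target_per_instance=5, max_instances=10)
```

With these example values the fleet drops to 0 when idle, grows to 3 instances for a backlog of 12, and is capped at 10 during a spike.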

Keep exploring: More resources on data-driven manufacturing

Making your manufacturing operations smarter with data and AI is a journey. Each step brings new clarity, from understanding what's possible to assessing readiness and implementing solutions that deliver real results.

No matter where you are today, we've built resources to guide you:

Want to explore more AI applications?

Discover 20+ data and AI use cases across quality control, supply chain, production floor, and document processing.

Not sure if your data is AI-ready?

Use our four-pillar assessment framework to evaluate availability, quality, integration, and governance.

Considering real-time analytics?

Read our decision playbook to determine when real-time delivers ROI and when batch processing is enough.