The reality: Most manufacturing data isn't there yet

Across the manufacturing industry, factories are moving faster, optimizing smarter, and delivering better results, some of them with the help of AI.

But while AI promises big wins, such as shorter time to market, higher product quality, and fewer mishaps on the production floor, there's one hard truth many solution providers won't mention:

For many manufacturers, the journey toward AI starts not with AI itself but with the day-to-day tools and systems that have quietly held things together for years. Often, that means aging spreadsheets, legacy ERP systems, and even manual processes still recorded on paper.

Only one in five manufacturers considers itself data-ready. That means the majority are still working through foundational challenges, trying to move forward with systems that were not built for the demands of today's fast-paced, data-driven environment.

It's a familiar picture, and one we hear time and again:

"Our factory still runs on spreadsheets from 30 years ago."

"Updating our ERP system is so complex that it rarely happens."

"We've experimented with AI-powered vision systems, but results have been mixed."

"AI seems promising, but can anyone show us a use case that actually fits our reality?"

The good news is that you don't need a complete digital transformation on day one. Becoming AI-ready starts with a clear focus. First, define the business outcomes you want to achieve. Then, evaluate whether your data is ready to support them.

This guide will walk you through how to assess and prepare your manufacturing data for AI adoption, drawing on real-world feedback, proven frameworks, and Brimit's hands-on experience working with manufacturers like you.

Understand where to start with data and AI

Start with the right goal: AI readiness for what?

Before asking "Is my data ready for AI?", ask: ready for what? Name the specific, measurable business outcome you want AI to deliver.

Examples:

- Reduce downtime by 20%

- Optimize maintenance schedules

- Forecast production demand with 95% accuracy

- Cut waste from quality defects by 15%

Defining a clear goal helps you evaluate whether your data is fit for that purpose.
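A goal such as "forecast production demand with 95% accuracy" is only testable once "accuracy" has a definition. One common convention, assumed here rather than prescribed by this guide, is accuracy = 1 - MAPE (mean absolute percentage error). The minimal Python sketch below applies it to hypothetical weekly demand figures.

```python
def forecast_accuracy(actual: list[float], forecast: list[float]) -> float:
    """Accuracy defined as 1 - MAPE (mean absolute percentage error).

    This definition is an assumption; teams also use WAPE, bias, or other metrics.
    MAPE is undefined when an actual value is zero, so such inputs are rejected.
    """
    if not actual or len(actual) != len(forecast):
        raise ValueError("actual and forecast must be non-empty and the same length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    mape = sum(abs(a - f) / abs(a) for a, f in zip(actual, forecast)) / len(actual)
    return 1.0 - mape


# Hypothetical weekly demand (units) and the forecast made for each week
actual = [1000, 1200, 900, 1100]
forecast = [950, 1260, 900, 1045]

accuracy = forecast_accuracy(actual, forecast)
print(f"Forecast accuracy: {accuracy:.2%} (target met: {accuracy >= 0.95})")
```

Here no week misses by more than 5%, so accuracy lands at about 96%, just above the target. Writing the metric down before any model exists also tells you which data you need: actual demand and past forecasts recorded at the same granularity.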

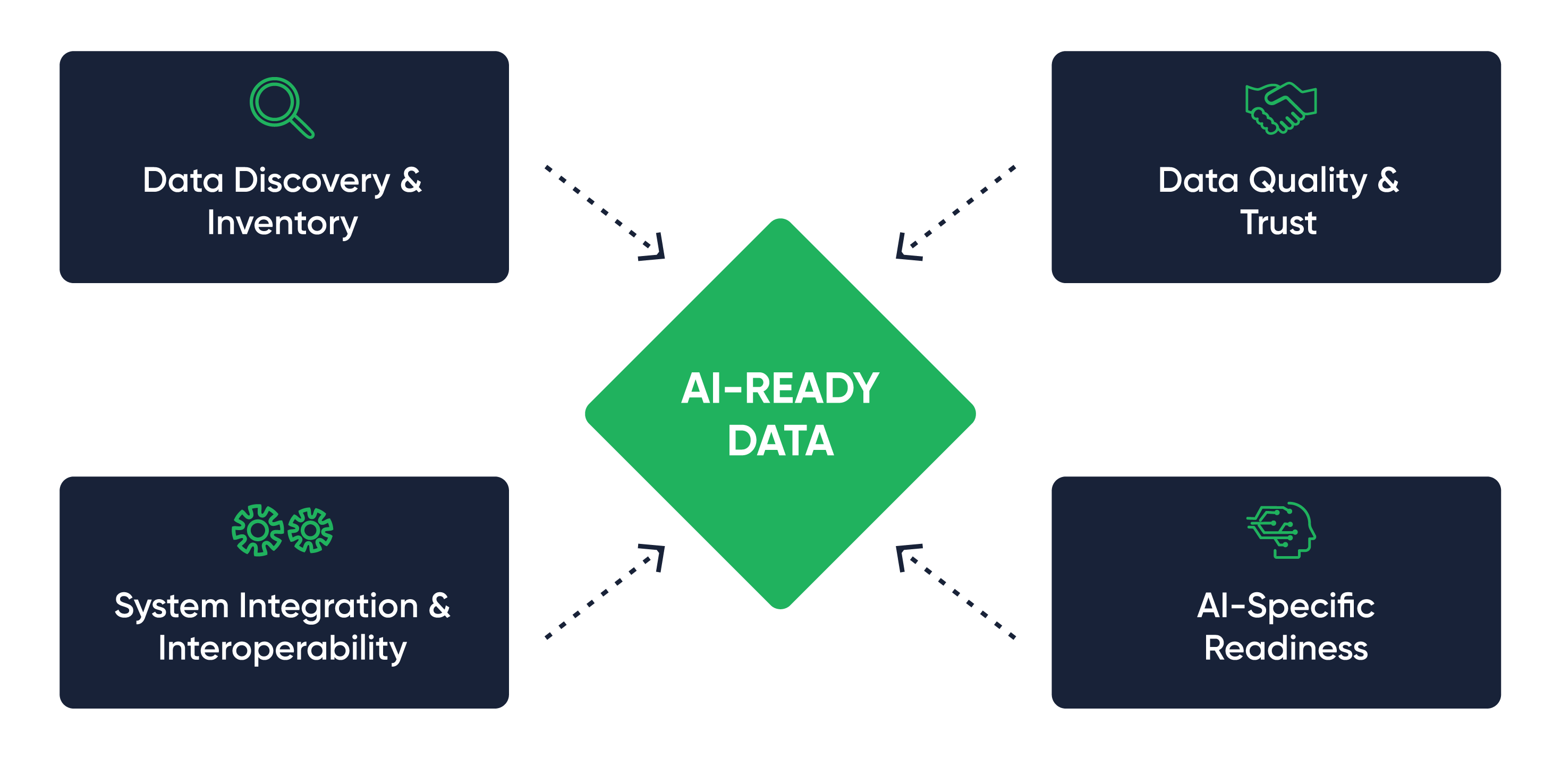

Four pillars of data readiness

At Brimit, we created an assessment framework to evaluate your organization across four critical dimensions. Each pillar builds upon the previous one, creating a foundation for sustainable AI success. To help you evaluate where you stand today, each pillar includes a simple self-assessment checklist. For each item, ask: Is this true for my organization today?

1. Data discovery and inventory

Many manufacturers discover that critical predictive data sits in Excel files on engineers' laptops rather than in accessible systems. This represents both risk and a lost opportunity. A comprehensive inventory of your data assets and their locations is the foundation of any successful AI initiative.

Assessment checklist:

Data visibility and mapping

- We've identified all major data sources used in operations, maintenance, quality assurance, and logistics.

- We know which systems we run (e.g., MES, ERP, SCADA, CMMS/EAM, WMS, LIMS) and what data each one holds.

- We've documented where each data source is stored (on-premise, cloud, file-based).

- We've inventoried and categorized all unstructured data (e.g., PDFs, spreadsheets, operator logs).

Data accessibility and ownership

- Data from each system can be exported or accessed via APIs.

- We understand whether data is available in real time or in batches.

- Access rights and responsibilities for each dataset are clearly defined.

- Our company has basic metadata and consistent naming conventions for datasets.

- We follow formal data retention and archival policies.
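The discovery and accessibility items above lend themselves to a simple, machine-readable inventory. The Python sketch below is one hypothetical way to record each data source and list the ones that fail a checklist item (no owner, no export or API access, file-based storage). The field names and allowed values are illustrative assumptions, not a standard schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DataSource:
    name: str             # e.g., "MES" or "Line 3 scrap log"
    system_type: str      # e.g., "MES", "ERP", "spreadsheet"
    location: str         # assumed values: "on-premise", "cloud", "file-based"
    owner: Optional[str]  # accountable person or team, None if unknown
    access: str           # assumed values: "api", "export", "none"
    delivery: str         # assumed values: "real-time", "batch"


def readiness_gaps(sources: list[DataSource]) -> dict[str, list[str]]:
    """Group source names by the checklist item they currently fail."""
    gaps: dict[str, list[str]] = {"no_owner": [], "no_access": [], "file_based": []}
    for source in sources:
        if not source.owner:
            gaps["no_owner"].append(source.name)
        if source.access == "none":
            gaps["no_access"].append(source.name)
        if source.location == "file-based":
            gaps["file_based"].append(source.name)
    return gaps


# Hypothetical inventory entries
inventory = [
    DataSource("ERP", "ERP", "on-premise", "Finance IT", "api", "batch"),
    DataSource("SCADA historian", "SCADA", "on-premise", "Controls team", "export", "real-time"),
    DataSource("Vibration readings", "spreadsheet", "file-based", None, "none", "batch"),
    DataSource("Shift handover notes", "spreadsheet", "file-based", "Production", "export", "batch"),
]
print(readiness_gaps(inventory))
```

A shared spreadsheet with the same columns works just as well; the point is that each gap becomes a named item someone can own and close.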

REAL-WORLD SPOTLIGHT

Digitizing scientific data for AI readiness

A leading manufacturer of cell and gene therapies relied on spreadsheets and paper records to track critical experiment and batch data. This manual approach made it difficult to ensure data integrity, limited scalability, and left valuable insights locked in inaccessible formats.

Solution: To modernize their data foundation, the team integrated their laboratory information management system (LIMS) with a cloud-based scientific data platform. They established bidirectional data flows via APIs, allowing experiment and batch metadata to pass from the LIMS into the cloud environment for processing, and return enriched, structured data for scientific review.

The system connected directly to lab instruments, such as plate readers, PCR systems, and balances, automating the ingestion of raw data streams. It enriched this data with contextual metadata, normalized it into an open, analytics-ready format, and made it accessible for downstream AI and analytics workflows.

Outcome: With this foundation in place, scientists shifted from manual transcription to working with high-integrity, traceable datasets, laying the groundwork for future AI-driven initiatives like anomaly detection, root cause analysis, and process optimization.

2. Data quality and trust

The ability to trust your data for critical business decisions is paramount. If AI for quality control is trained on data from miscalibrated sensors, it will incorrectly reject good products or pass defective ones. Poor data quality directly impacts your bottom line through false positives and missed defects. Because a model learns from whatever data it is given and then applies that learning at scale, data quality issues compound in AI systems, turning minor inaccuracies into major operational problems.

Assessment checklist:

Data accuracy and reliability

- Key sensors are regularly calibrated and validated against physical measurements.

- We cross-check redundant sensors against each other to confirm their readings agree.

- We track data drift or changes in accuracy over time.
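Cross-checking redundant sensors and tracking drift can start as simple comparisons. This Python sketch assumes two sensors measure the same point and uses a hypothetical 0.5 °C tolerance. It flags readings where the pair disagrees and computes the average offset per window, so a steadily growing gap (a typical sign of drift) becomes visible.

```python
def disagreements(primary: list[float], backup: list[float], tolerance: float) -> list[int]:
    """Indices where two redundant sensors differ by more than `tolerance`."""
    return [i for i, (a, b) in enumerate(zip(primary, backup)) if abs(a - b) > tolerance]


def mean_offset_per_window(primary: list[float], backup: list[float], window: int) -> list[float]:
    """Average (primary - backup) for each consecutive window of readings.

    A stable offset may point to a calibration bias; a steadily growing one suggests drift.
    """
    diffs = [a - b for a, b in zip(primary, backup)]
    chunks = [diffs[i:i + window] for i in range(0, len(diffs), window)]
    return [sum(chunk) / len(chunk) for chunk in chunks]


# Hypothetical temperatures (°C) from two sensors on the same oven zone
primary = [80.1, 80.3, 80.2, 81.0, 81.4, 81.9]
backup = [80.0, 80.2, 80.2, 80.3, 80.4, 80.5]

print(disagreements(primary, backup, tolerance=0.5))  # [3, 4, 5]
print(mean_offset_per_window(primary, backup, window=3))
```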

Data completeness

- Our datasets include all relevant fields, with no major gaps or missing values.

- We have enough time-series data to represent full production cycles.

- Edge cases and exceptions are captured in our datasets.
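Gaps in time-series data are easy to miss by eye but easy to find in code. This sketch assumes a sensor that should report at least once a minute (an illustrative sampling rate) and lists every interval where consecutive samples are further apart than that.

```python
from datetime import datetime, timedelta


def find_gaps(timestamps: list[datetime], max_interval: timedelta) -> list[tuple[datetime, datetime]]:
    """Return (last sample before gap, first sample after gap) pairs."""
    ordered = sorted(timestamps)
    return [(prev, curr) for prev, curr in zip(ordered, ordered[1:]) if curr - prev > max_interval]


# Hypothetical one-minute samples with a stretch missing after 08:03
start = datetime(2024, 3, 1, 8, 0)
samples = [start + timedelta(minutes=m) for m in (0, 1, 2, 3, 9, 10, 11)]

for before, after in find_gaps(samples, max_interval=timedelta(minutes=1)):
    print(f"No data between {before:%H:%M} and {after:%H:%M}")
```

In practice you would allow some jitter, for example a `max_interval` of 90 seconds for a sensor nominally sampled every 60 seconds.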

Data timeliness and consistency

- Data arrives fast enough to support decision-making.

- We understand where delays occur in data collection or transmission.

- Measurements follow standard units, date formats, and naming structures across systems.
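Consistent units and date formats usually come down to knowing each source's conventions and converting at ingestion. The sketch below assumes two hypothetical sources, an MES that logs ISO-style timestamps in °C and a SCADA system that logs US-style timestamps in °F, and maps both to ISO 8601 timestamps and degrees Celsius. Declaring the format per source, instead of guessing, avoids misreading ambiguous dates such as 03/01.

```python
from datetime import datetime

# Hypothetical per-source conventions: (timestamp format, temperature unit)
SOURCE_CONVENTIONS = {
    "mes": ("%Y-%m-%d %H:%M:%S", "C"),
    "scada_plant_b": ("%m/%d/%Y %I:%M %p", "F"),
}


def normalize(source: str, raw_timestamp: str, raw_temperature: float) -> dict:
    """Convert one reading to an ISO 8601 timestamp and degrees Celsius."""
    fmt, unit = SOURCE_CONVENTIONS[source]
    timestamp = datetime.strptime(raw_timestamp, fmt).isoformat()
    if unit == "C":
        celsius = raw_temperature
    elif unit == "F":
        celsius = (raw_temperature - 32) * 5 / 9
    else:
        raise ValueError(f"Unsupported unit {unit!r} for source {source!r}")
    return {"source": source, "timestamp": timestamp, "temperature_c": celsius}


print(normalize("mes", "2024-03-01 14:30:00", 100.0))
print(normalize("scada_plant_b", "03/01/2024 02:30 PM", 212.0))
```

Both calls describe the same moment and temperature, so after normalization their timestamp and temperature fields match.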

REAL-WORLD SPOTLIGHT

Raising data integrity for visual AI

Hitachi set out to automate quality inspections for processes like electric wire crimping, where precision and consistency are critical. However, their plant data was fragmented across PLCs, SCADA, MES, and various IoT systems, all producing different data types (numeric, discrete, text, and image) in inconsistent formats. This lack of standardization made it nearly impossible to use the data reliably for AI.

Solution: They implemented the Manufacturing Data Engine on Google Cloud, creating a standardized pipeline that connected over 250 different PLC protocols through industrial edge computers. The data pipeline securely streamed plant-floor signals via OPC UA, Pub/Sub, and Dataflow, transforming them into a unified, clean format for analytics and machine learning.

The team stored cleaned datasets in Cloud Storage, BigQuery, and Cloud Bigtable, using schema mapping to harmonize data across multiple factories. AI models were trained using Vertex AI for visual inspection tasks, such as identifying defective crimps. The engineers also containerized the models and deployed them back to the shop floor using industrial edge devices for real-time inference.

Outcome: The company enabled real-time defect detection, improved quality consistency, and eliminated manual inspection errors. The standardized data pipeline now supports AI reuse across other processes, accelerating digital transformation at scale.

3. System integration and interoperability

The next generation of manufacturing data platforms must bridge two traditionally separate worlds: information technology (IT) and operational technology (OT). Siloed systems require expensive manual intervention and create dangerous blind spots. Without proper integration, your AI initiatives will struggle to access the comprehensive data they need to deliver value.

Assessment checklist:

Data integration analysis

- We've mapped out how data flows between systems (ERP, MES, SCADA, etc.).

- We've identified manual workarounds or file-based processes that could be automated.

- Known bottlenecks or data silos are documented and tracked.
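A data-flow map can be as simple as a list of edges between systems. This Python sketch, using hypothetical systems and transfer methods, records each flow. It then lists the flows that still rely on files or manual steps, plus any system with no documented flows at all, which is a likely silo.

```python
# Each flow: (source system, target system, transfer method). All entries are hypothetical.
FLOWS = [
    ("SCADA", "MES", "api"),
    ("MES", "ERP", "file"),               # nightly CSV export
    ("QA spreadsheet", "ERP", "manual"),  # re-keyed by hand
    ("ERP", "WMS", "api"),
]
SYSTEMS = ["SCADA", "MES", "ERP", "WMS", "QA spreadsheet", "CMMS"]
AUTOMATED_METHODS = {"api", "stream"}


def automation_candidates(flows):
    """Flows that depend on file drops or manual re-entry."""
    return [flow for flow in flows if flow[2] not in AUTOMATED_METHODS]


def isolated_systems(flows, systems):
    """Systems that appear in no documented flow, i.e. potential silos."""
    connected = {name for src, dst, _ in flows for name in (src, dst)}
    return sorted(set(systems) - connected)


print(automation_candidates(FLOWS))
print(isolated_systems(FLOWS, SYSTEMS))
```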

API and streaming readiness

- Key systems offer modern APIs for data extraction.

- Legacy systems are either integrated, or we have a clear plan for modernization.

- We support streaming or near-real-time data transfer where needed.

Stability of architecture

- We've reviewed the cost and complexity of integrating vs. replacing outdated systems.

- Our current infrastructure can scale with additional data sources.

- We've assessed and documented technical debt.

REAL-WORLD SPOTLIGHT

Breaking down data silos to unlock predictive insights

A German industrial equipment manufacturer was dealing with sensor data spread across different systems, formats, and machines. Because of this data fragmentation and inconsistency, their teams couldn't spot early signs of failure and had to rely on reactive maintenance when things broke down. They needed a single stream of data, integrated into a system that worked end to end.

Solution: The company built a cloud-based predictive maintenance system on Microsoft Azure. The team connected industrial machines to Azure IoT Hub, streaming live sensor data into Azure Data Lake for centralized storage and management. Using Azure Databricks, they cleaned, aligned, and transformed the data into machine learning features.

With Azure Machine Learning, the team trained predictive models on historical failure and maintenance records to detect early signs of equipment issues. Azure Data Factory handled data orchestration and automated model retraining, while Power BI dashboards delivered real-time health scores and anomaly alerts to maintenance teams.

Outcome: The manufacturer transitioned from reactive to predictive maintenance, achieving a 40% increase in equipment uptime, 25% reduction in maintenance costs, and 35% faster anomaly detection. Most importantly, they created a scalable, integrated data platform that could support future AI use cases across production lines.

4. AI-specific readiness

Having data isn't enough. It must be structured and rich enough to train effective AI models. AI models require specific data characteristics that go beyond traditional analytics requirements. Without proper historical depth and labeled examples, your AI investments will fail to deliver ROI. This pillar assesses whether your data meets the unique demands of machine learning and AI applications.

Assessment checklist:

Data format and structure

- Data is stored in structured formats (CSV, databases) when possible.

- Unstructured data is organized, labeled, and stored in accessible repositories.

- We've prepared or planned for preprocessing pipelines to clean incoming data.

Depth of historical data

- We have sufficient historical data to represent normal operations and anomalies.

- Our data spans seasonal shifts, product variants, and changing conditions.

- Production events (e.g., downtime, quality issues) are timestamped and well-documented.
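Whether history is "sufficient" depends on the use case, but two quick checks help. The first is which calendar months have no data at all, a rough proxy for seasonal coverage. The second is how many anomaly events exist per product variant. The records and the minimum anomaly count below are hypothetical.

```python
from collections import Counter
from datetime import date


def missing_months(dates: list[date]) -> list[int]:
    """Calendar months (1-12) with no records in any year."""
    seen = {d.month for d in dates}
    return [month for month in range(1, 13) if month not in seen]


def variants_short_on_anomalies(records, min_anomalies: int) -> list[str]:
    """Product variants with fewer than `min_anomalies` recorded anomaly events."""
    counts = Counter({variant: 0 for _, variant, _ in records})  # include variants with zero anomalies
    counts.update(variant for _, variant, is_anomaly in records if is_anomaly)
    return sorted(v for v, n in counts.items() if n < min_anomalies)


# Hypothetical records: (date, product variant, was this an anomaly?)
records = [
    (date(2023, 1, 15), "A", False),
    (date(2023, 4, 2), "A", True),
    (date(2023, 7, 9), "B", False),
    (date(2023, 10, 20), "A", True),
    (date(2024, 2, 11), "B", False),
]

print(missing_months([d for d, _, _ in records]))  # [3, 5, 6, 8, 9, 11, 12]
print(variants_short_on_anomalies(records, min_anomalies=1))  # ['B']
```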

Labeling and training scenario coverage

- We've labeled datasets for defect classification, root causes, or maintenance triggers.

- Failure logs are matched with outcomes (e.g., fix time, impact).

- Our datasets represent different shifts, lines, product SKUs, and machine configurations.
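Coverage across shifts, lines, SKUs, and configurations can be checked by counting labeled examples per combination. This sketch looks at two dimensions, shift and line, with hypothetical labels and a configurable minimum; the same idea extends to more dimensions through `itertools.product`.

```python
from collections import Counter
from itertools import product


def uncovered_combinations(labels, shifts, lines, min_examples=1):
    """(shift, line) pairs with fewer than `min_examples` labeled records."""
    counts = Counter((record["shift"], record["line"]) for record in labels)
    # Counter returns 0 for combinations that never appear
    return [combo for combo in product(shifts, lines) if counts[combo] < min_examples]


# Hypothetical labeled inspection records
labels = [
    {"shift": "day", "line": "L1", "label": "defect"},
    {"shift": "day", "line": "L1", "label": "ok"},
    {"shift": "day", "line": "L2", "label": "defect"},
    {"shift": "night", "line": "L1", "label": "ok"},
]

print(uncovered_combinations(labels, ["day", "night"], ["L1", "L2"]))  # [('night', 'L2')]
```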

REAL-WORLD SPOTLIGHT

Preparing image data for machine learning

Subaru was working with large volumes of annotated image data from its in-vehicle camera systems, aiming to train advanced vision models. But they faced a major bottleneck: transforming that raw image data into usable training datasets (e.g., TFRecords for TensorFlow) took over 24 hours per batch. This slowed development cycles and made AI experimentation painfully inefficient. The legacy on-premise preprocessing setup involved manual steps, produced inconsistent formats, and lacked scalable infrastructure, forcing engineers to spend more time preparing data than building models.

Solution: The company moved preprocessing to Google Cloud Dataflow, a scalable data pipeline engine. This allowed Subaru to automate and accelerate the transformation process, cut prep time from over 24 hours to just 30 minutes, and standardize how image data flowed through their AI workflows.

Outcome: The company achieved AI readiness by automating, scaling, and standardizing data preparation to deliver high-quality, consistent inputs for machine learning. With this foundation in place, Subaru could efficiently process large image datasets while maintaining flexibility, keeping core data on-premises and leveraging the cloud where it added speed and scalability.

Practical steps to ensure data readiness

Knowing where you stand is only the first step. Below is a practical roadmap to help you move from scattered, siloed data to an AI-ready foundation.

Step 1: Fix the data availability problem

- Map your current data sources and systems

- Identify data owners across departments

- Install sensors (IIoT or traditional) where critical measurements are missing

- Build soft sensors from process models

- Digitize paper records

- Record lab analysis results with timestamps so they can be aligned with process data

Step 2: Improve data quality and structure

- Classify and tag data

- Apply metadata (e.g., machine ID, batch #)

- Automate data cleaning with AI-assisted tools

- Validate sensor accuracy regularly

- Monitor data drift and anomalies
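Monitoring for anomalies can begin with a statistical baseline before any machine learning is involved. This sketch flags readings that sit more than three standard deviations from the mean of the preceding window. The window size, the threshold of 3, and the readings are illustrative assumptions.

```python
from statistics import mean, stdev


def rolling_zscore_alerts(values: list[float], window: int, threshold: float = 3.0) -> list[int]:
    """Indices whose value deviates from the preceding `window` values by more than
    `threshold` standard deviations. `window` must be at least 2 for stdev()."""
    alerts = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        sd = stdev(history)
        # Skip flat history: a zero standard deviation makes the z-score undefined
        if sd > 0 and abs(values[i] - mean(history)) / sd > threshold:
            alerts.append(i)
    return alerts


# Hypothetical pressure readings (bar) with one spike
readings = [50.1, 49.8, 50.3, 50.0, 49.9, 50.2, 50.1, 57.5, 50.0, 50.2]
print(rolling_zscore_alerts(readings, window=5))  # [7]
```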

Step 3: Make hidden data accessible

- Create a central OT/IT data platform

- Enable real-time data pipelines

- Use edge-to-cloud architectures

- Ensure systems offer API or export capabilities
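Edge-to-cloud architectures often buffer data at the edge so a network outage doesn't lose readings. The sketch below shows a minimal store-and-forward buffer; the `upload` callable is a hypothetical stand-in for whatever client your cloud platform provides, assumed to return `True` on success.

```python
from collections import deque
from typing import Callable


class StoreAndForwardBuffer:
    """Holds readings at the edge until an upload succeeds."""

    def __init__(self, max_size: int, batch_size: int):
        # When full, deque(maxlen=...) discards the oldest reading to make room
        self.queue: deque = deque(maxlen=max_size)
        self.batch_size = batch_size

    def add(self, reading: dict) -> None:
        self.queue.append(reading)

    def flush(self, upload: Callable[[list], bool]) -> int:
        """Upload in batches; stop at the first failure and keep unsent readings."""
        sent = 0
        while self.queue:
            batch = [self.queue[i] for i in range(min(self.batch_size, len(self.queue)))]
            if not upload(batch):
                break
            for _ in batch:
                self.queue.popleft()
            sent += len(batch)
        return sent


buffer = StoreAndForwardBuffer(max_size=10_000, batch_size=2)
for seq in range(5):
    buffer.add({"machine_id": "press-07", "seq": seq, "pressure_bar": 180.0})

print(buffer.flush(lambda batch: False))  # 0: link down, all 5 readings stay buffered
print(buffer.flush(lambda batch: True))   # 5: link restored, sent in batches of 2, 2, 1
```

Dropping the oldest readings on overflow is one design choice; a site that cannot lose any data might spill to local disk instead.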

Step 4: Prepare your organization

- Assign a data steward or data manager

- Upskill your team in data literacy

- Label past events and outcomes to build training datasets

- Pilot AI on one production line first, and then scale
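Labeling past events often means joining historical failure logs to sensor readings. This sketch assumes a hypothetical rule that is a common framing for failure prediction: a reading is labeled 1 if the same machine failed within a fixed horizon after it, otherwise 0. The 3-hour horizon and the records are illustrative.

```python
from datetime import datetime, timedelta


def label_readings(readings: list[dict], failures: list[dict], horizon: timedelta) -> list[dict]:
    """Copy each reading with label=1 if its machine failed within `horizon` after it."""
    labeled = []
    for reading in readings:
        fails_soon = any(
            f["machine_id"] == reading["machine_id"]
            and timedelta(0) < f["time"] - reading["time"] <= horizon
            for f in failures
        )
        labeled.append({**reading, "label": int(fails_soon)})
    return labeled


# Hypothetical sensor readings and failure log
readings = [
    {"machine_id": "press-07", "time": datetime(2024, 3, 1, 8), "vibration_mm_s": 2.1},
    {"machine_id": "press-07", "time": datetime(2024, 3, 1, 10), "vibration_mm_s": 4.8},
    {"machine_id": "press-07", "time": datetime(2024, 3, 1, 13), "vibration_mm_s": 1.9},
    {"machine_id": "press-09", "time": datetime(2024, 3, 1, 11), "vibration_mm_s": 2.0},
]
failures = [{"machine_id": "press-07", "time": datetime(2024, 3, 1, 12)}]

for row in label_readings(readings, failures, horizon=timedelta(hours=3)):
    print(row["machine_id"], row["time"].strftime("%H:%M"), row["label"])
```

Only the 10:00 reading on press-07 falls inside the 3-hour window before its failure, so it is the only one labeled 1.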

Keep exploring: More resources on data-driven manufacturing

Making your manufacturing operations smarter with data and AI is a journey. Each step brings new clarity, from understanding what's possible to assessing readiness and implementing solutions that deliver real results.

No matter where you are today, we've built resources to guide you:

Want to see what's possible with AI?

Explore 20+ real-world use cases showing how manufacturers use data and AI to innovate and cut costs.

Want to see computer vision in action?

Explore real-world computer vision use cases in the areas of quality inspection, safety monitoring, and process optimization.

Considering real-time analytics?

Read our decision playbook to determine when real-time delivers ROI and when batch processing is enough.