Configuring the Sitecore Search API Crawler to Retrieve Data from XMCloud Edge

In this article, I walk through setting up an API crawler that transfers data from XMCloud Edge to Sitecore Search. The first step is to decide which data you want to index in Sitecore Search. You can either create a new Sitecore Search entity or use the default Content entity. For instructions on creating a custom entity, please refer to my previous article available here.

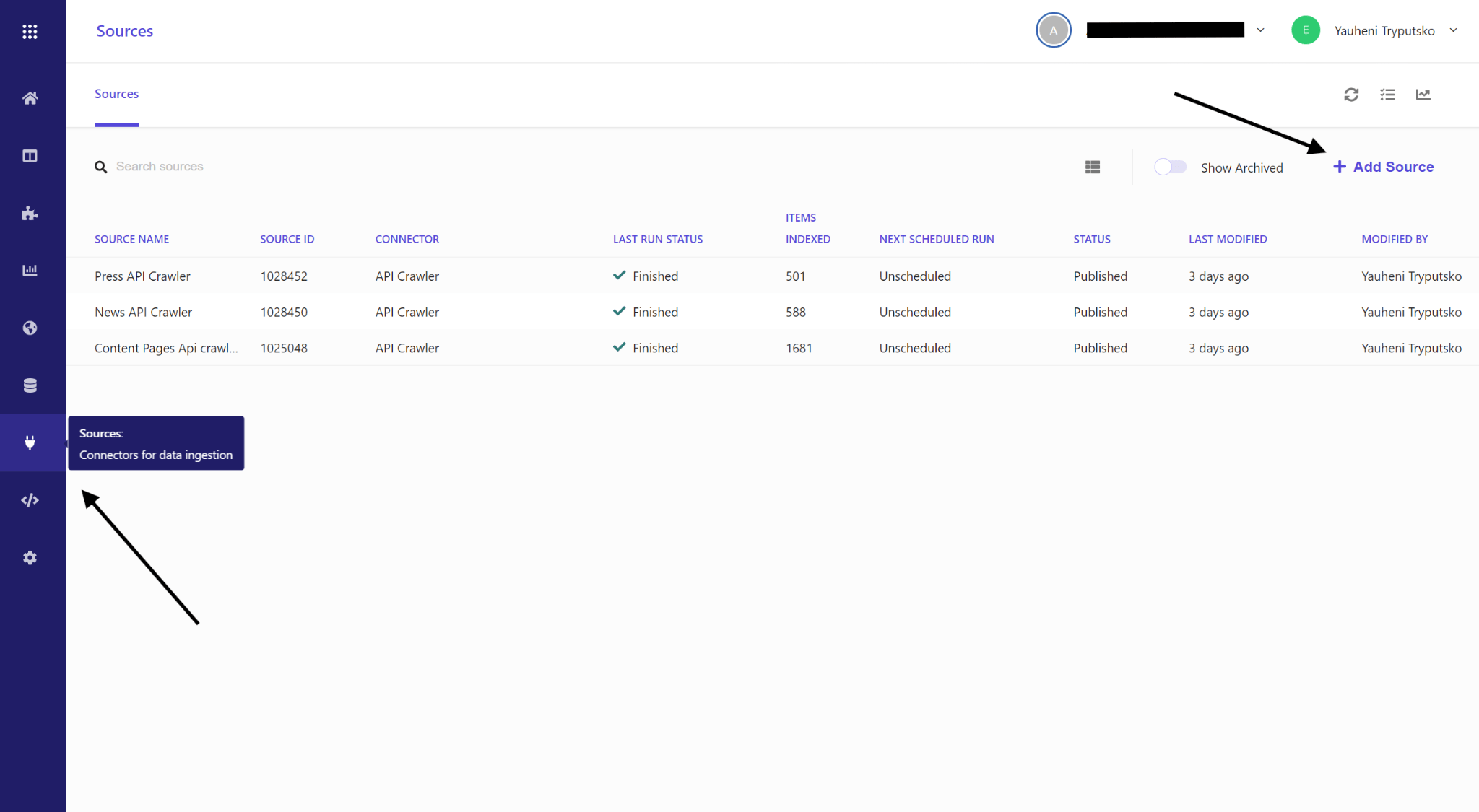

To configure an API crawler source, navigate to the "Sources" section in the menu and select the "Add Source" button.

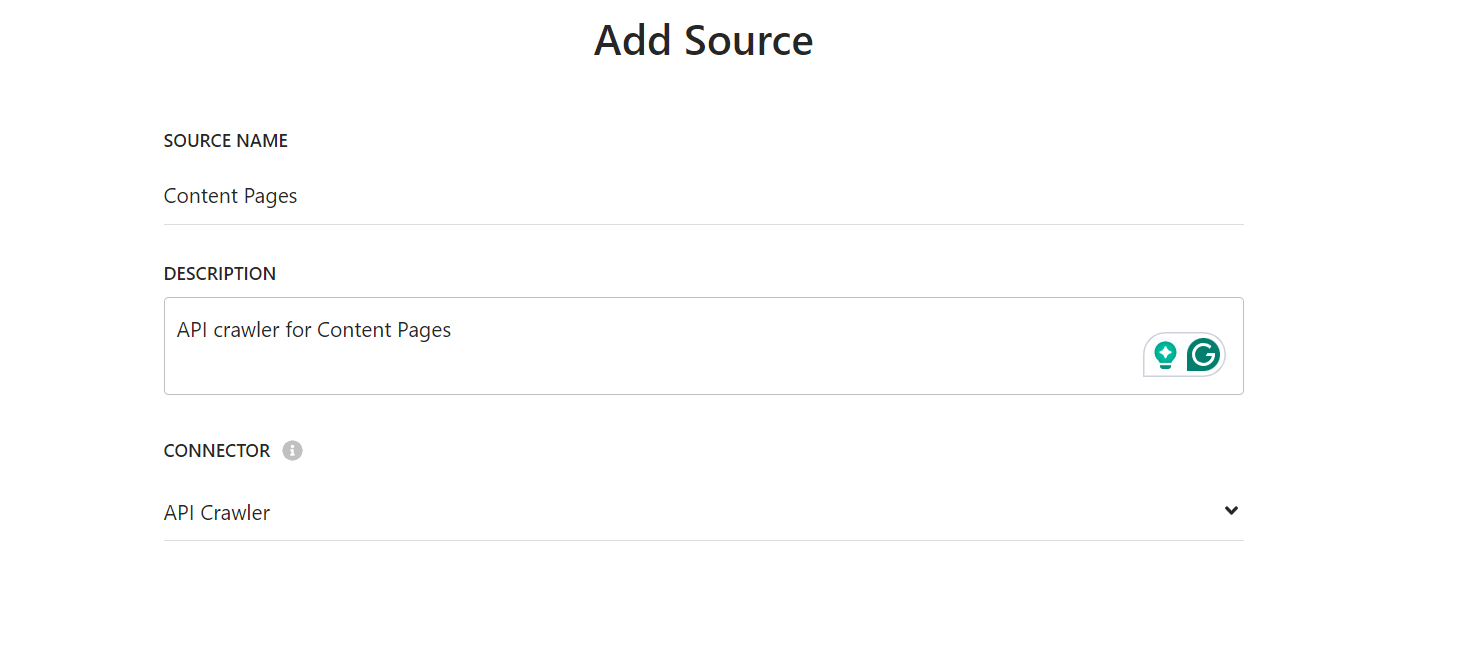

Upon clicking "Add Source," a popup window will appear. Here, you need to configure three fields: Source Name, Description, and Connector. The Connector field is a dropdown menu where you must select "API Crawler." After configuring all the fields, click the "Save" button to create a new API crawler source.

Once the Source is created, you will be redirected to the API crawler source settings page. This page comprises various configuration sections, including Source Information, General, API Crawler Settings, Available Locales, Tags Definition, Triggers, Document Extractors, Authentication, Request Extractors, Crawler Schedule, and Incremental Updates. Detailed descriptions of each section can be found in the Sitecore Search documentation. In this article, I will share my hands-on experience in setting up an API crawler and extracting data from XMCloud Edge.

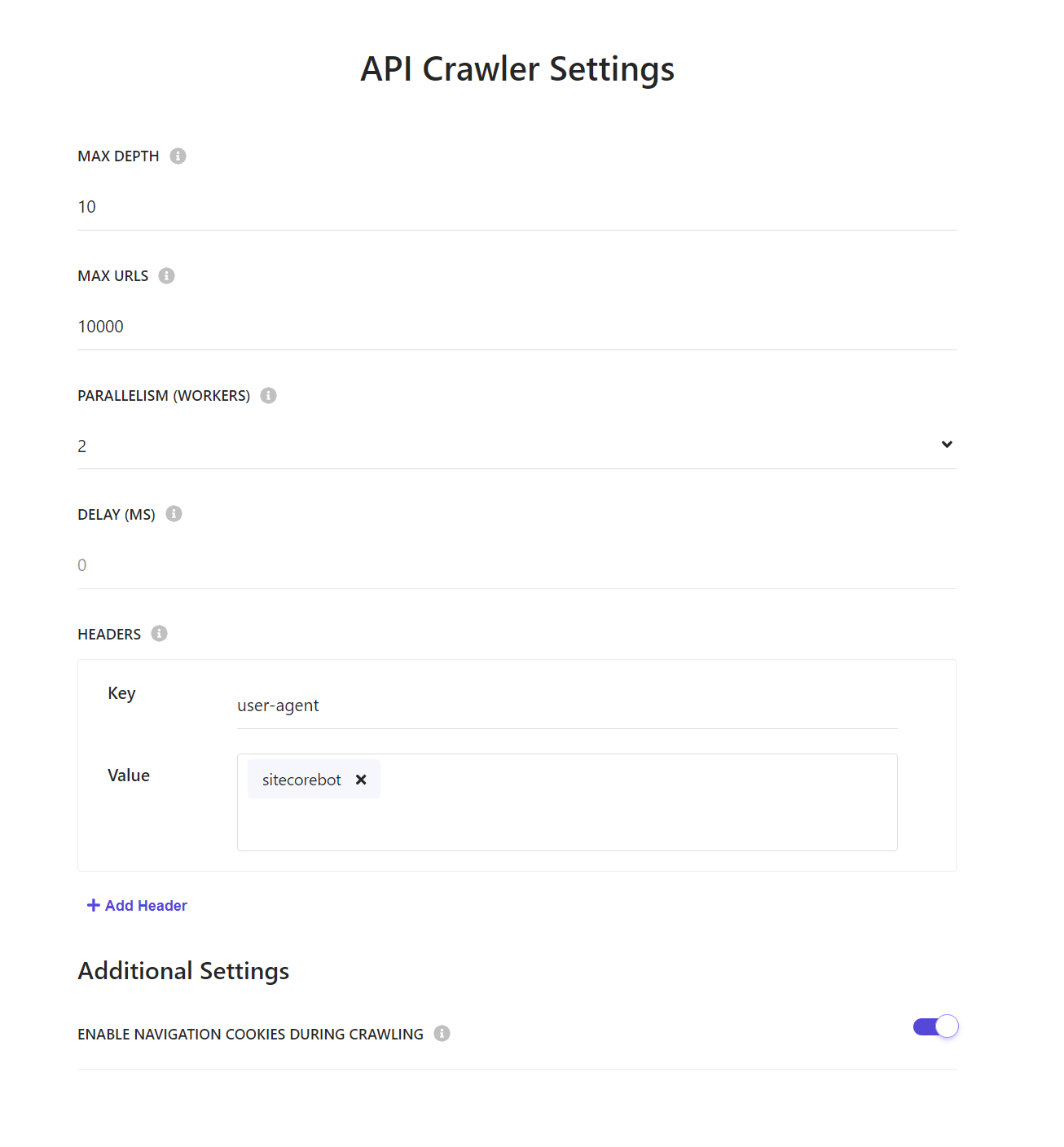

Let’s review the steps I followed to configure the API crawler. The initial step involves setting up the API crawler settings, which include the following parameters: MAX DEPTH, MAX URLS, PARALLELISM (WORKERS), DELAY (MS), HEADERS, and Additional Settings.

For my use case, the most important setting is MAX DEPTH. I set it to 10, which means the crawler will traverse up to 10 levels of the child item hierarchy when generating page detail queries. Adjust this value to match the depth of the child items in your Sitecore content tree: a value of 5 may be enough for many projects, but I chose 10 because of the intricate structure of my Sitecore implementation.
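To pick a MAX DEPTH value, it can help to measure how deep your content actually goes below the start item. The sketch below uses a hypothetical helper, `requiredDepth` (not part of Sitecore Search): given the start path and a list of item paths (for example, exported from your content tree), it returns the deepest level below the start item. It assumes each tree level costs one level of crawl depth, because the request extractor descends one level per request; treat the result as a starting point and verify it against a real crawl.

```javascript
// Hypothetical helper: how many levels below startPath does the content go?
// Assumes each tree level costs one crawl-depth level, because the request
// extractor generates one new request per child, one level at a time.
function requiredDepth(startPath, itemPaths) {
  const prefix = startPath.replace(/\/+$/, "") + "/";
  let maxDepth = 0;
  for (const p of itemPaths) {
    if (!p.startsWith(prefix)) continue; // outside the crawled subtree
    const relative = p.slice(prefix.length).split("/").filter(Boolean);
    maxDepth = Math.max(maxDepth, relative.length);
  }
  return maxDepth;
}

// Illustrative paths only; use paths from your own content tree.
const paths = [
  "/sitecore/content/ALIA/corporate/about",
  "/sitecore/content/ALIA/corporate/about/team",
  "/sitecore/content/ALIA/corporate/news/2024/03/article-1",
];
console.log(requiredDepth("/sitecore/content/ALIA/corporate", paths)); // 4
```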

PARALLELISM (WORKERS) specifies the number of concurrent threads, or workers, used to crawl and index content. The default is 5 and the maximum is 10, although selecting the maximum might slow the process down when the crawler logic is complex. I set it to 2, but you can keep the default if it suits your needs. Even with 2 workers, the crawler processed 1600 URLs in approximately 15 minutes, which I consider efficient. I plan to experiment with other values to optimize performance further.
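Those figures give a rough throughput baseline: 1600 URLs in about 15 minutes is roughly 107 URLs per minute, or about 1.8 URLs per second with two workers. The sketch below turns that into a back-of-the-envelope estimate for a different content size. It assumes crawl time scales linearly with the number of URLs at a fixed PARALLELISM value, which is a simplification; Edge latency, rate limits, and extractor complexity will all affect real timings. The 5000-URL target is purely illustrative.

```javascript
// Back-of-the-envelope crawl estimate from one observed run.
// Assumes linear scaling with URL count at the same PARALLELISM setting.
function estimateCrawlMinutes(observedUrls, observedMinutes, targetUrls) {
  const urlsPerMinute = observedUrls / observedMinutes;
  return targetUrls / urlsPerMinute;
}

// Observed: 1600 URLs in ~15 minutes with PARALLELISM = 2.
const rate = 1600 / 15;             // URLs per minute
const perSecond = 1600 / (15 * 60); // URLs per second
const minutesFor5000 = estimateCrawlMinutes(1600, 15, 5000);

console.log(rate.toFixed(1), perSecond.toFixed(2), minutesFor5000.toFixed(1)); // 106.7 1.78 46.9
```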

I also set the HEADERS value to "user-agent: sitecorebot", as recommended in the documentation, although in my testing this header did not appear to be mandatory. I left the remaining settings at their defaults.

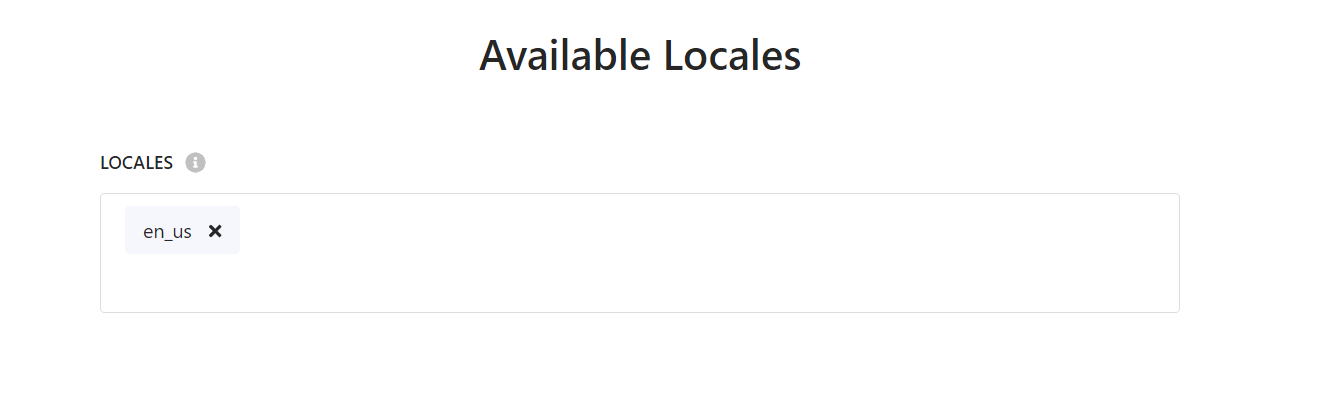

The next configuration step is setting the Available Locales, which specifies the locales the crawler will handle. If you are dealing with multiple locales, you should create specific Request Extractors and Document Extractors for each locale; detailed instructions are in the Sitecore documentation: Configuring a Crawler to Crawl Localized Content. In my experience, however, it is often simpler to create a separate crawler for each locale.

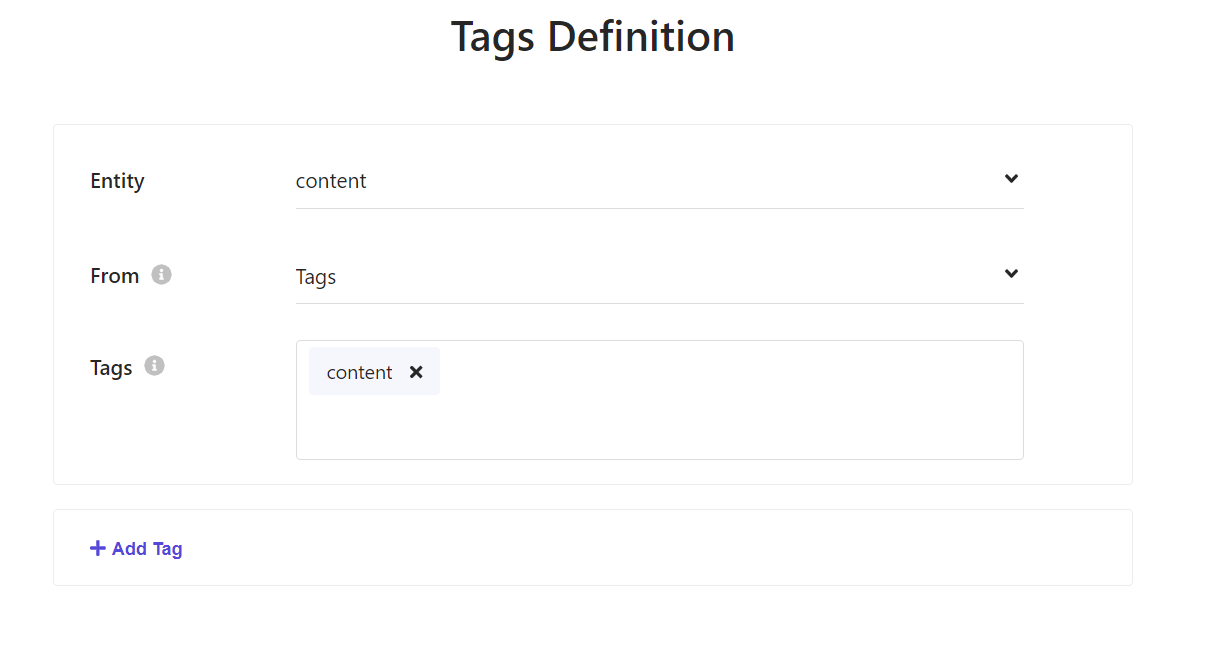

The next configuration step is the Tags Definition setting, which determines which entity the crawler uses to create items in Sitecore Search. By default, all entities are selected, but most use cases need only one. If your project requires items for multiple entities, you can configure two or more tag definitions accordingly.

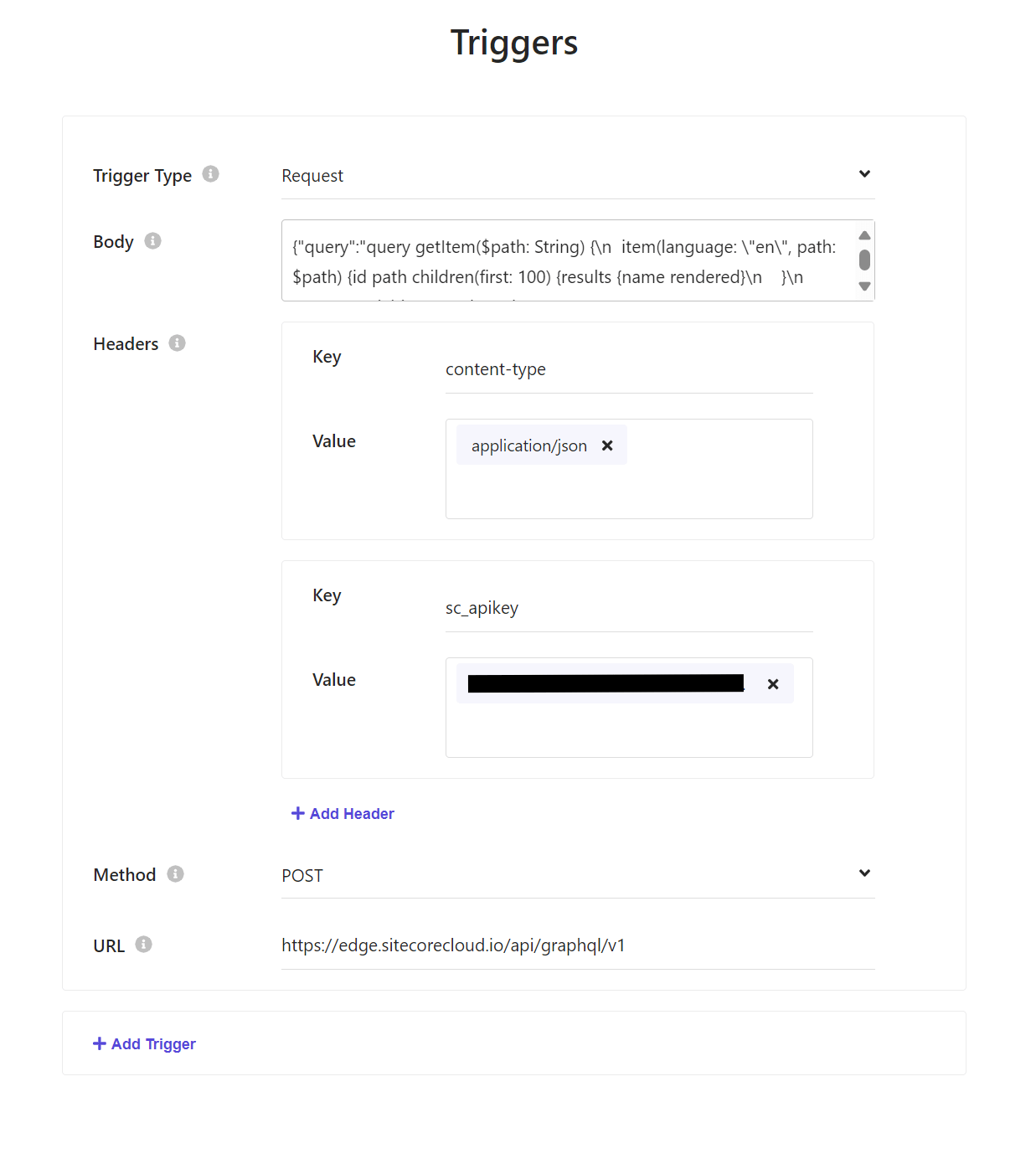

Let’s move on to an important setting—Triggers. A trigger is the initial point that a Sitecore Search crawler uses to locate content for indexing. Depending on its type, a trigger can either contain a comprehensive list of URLs to crawl (when using a sitemap or sitemap index trigger) or serve as a starting point for further actions (when using a request or JavaScript trigger).

To transfer data from XMCloud Edge, set the trigger type to "Request" and configure a POST request to https://edge.sitecorecloud.io/api/graphql/v1. For the request to work, you must also configure two headers: content-type (application/json) and sc_apikey (your Edge API key). The body should contain a query that retrieves the start item and the names of its children; these child names are then used to generate the detail page queries. Here is an example of the body:

```json
{"query":"query getItem($path: String) {\n item(language: \"en\", path: $path) {id path children(first: 100) {results {name rendered}\n }\n }\n}\n","variables":{"path":"/sitecore/content/ALIA/corporate"}}
```
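Hand-escaping the query string (the `\n` and `\"` sequences in the body) is easy to get wrong. One option is to generate the body with `JSON.stringify` and paste the printed result into the trigger. The sketch below does that with a hypothetical helper, `buildEdgeBody`; the query is the same as in the example body, except that the language is a parameter, which makes it easier to produce one trigger per locale if you follow the one-crawler-per-locale approach. The locale codes you pass in are assumptions that must match the languages in your Sitecore instance.

```javascript
// Hypothetical helper: build the trigger body for the Edge "getItem" query.
// JSON.stringify escapes the quotes inside the GraphQL query for us, and
// also turns the language into a quoted GraphQL string literal.
function buildEdgeBody(path, language = "en") {
  const query =
    `query getItem($path: String) { item(language: ${JSON.stringify(language)}, path: $path) ` +
    `{ id path children(first: 100) { results { name rendered } } } }`;
  return JSON.stringify({ query, variables: { path } });
}

// Body for the English trigger; paste the printed JSON into the trigger body.
console.log(buildEdgeBody("/sitecore/content/ALIA/corporate"));
```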

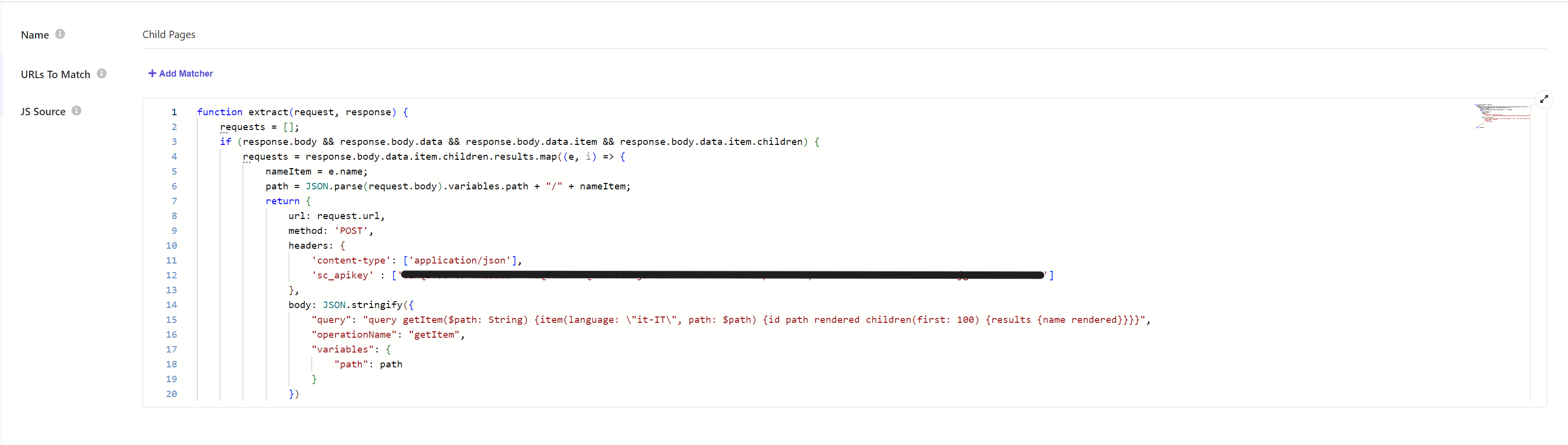

The next configuration step is setting up Request Extractors. A request extractor generates an additional list of URLs (in our case, requests) for the crawler to index. If following the trigger's starting point alone does not reach all the content you need, you can add a request extractor: a JavaScript function that takes the trigger output as its input. To extract data from XMCloud Edge, we need a JavaScript function that turns each child item in the response into a new Edge query. Below are the code and a screenshot of my extractor.

```javascript
// Request extractor: for every child returned by the previous Edge query,
// emit a new POST request that fetches that child (and its own children).
function extract(request, response) {
  let requests = [];

  if (response.body && response.body.data && response.body.data.item && response.body.data.item.children) {
    // Path of the item that produced this response, e.g. /sitecore/content/ALIA/corporate
    const parentPath = JSON.parse(request.body).variables.path;

    requests = response.body.data.item.children.results.map((child) => {
      const path = parentPath + "/" + child.name;

      return {
        url: request.url,
        method: 'POST',
        headers: {
          'content-type': ['application/json'],
          'sc_apikey': ['your key'] // replace with your Edge API key
        },
        body: JSON.stringify({
          "query": "query getItem($path: String) {item(language: \"en\", path: $path) {id path rendered children(first: 100) {results {name rendered}}}}",
          "operationName": "getItem",
          "variables": {
            "path": path
          }
        })
      };
    });
  }

  return requests;
}
```

The extractor configuration includes several key fields: Name, URLs To Match, and JS Source. The URLs To Match field specifies the URL patterns to which the extractor rules apply, and it supports both regular expressions and glob expressions. However, because every request in this setup goes to the same Edge GraphQL endpoint, URL patterns cannot tell items apart, so I chose to filter the data in JavaScript instead.

The extractor only generates new requests when the response contains response.body.data.item.children; otherwise it returns an empty list and that branch of the crawl ends. I adapted this JavaScript from the Sitecore documentation to meet the specific requirements of my project. Crucially, the query body must return not only the item's content but also its child items, because that is what allows the crawler to navigate the Sitecore tree recursively through Edge queries.
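Because each response produces requests for its children, and those responses are fed back into the same extractor, the crawl walks the tree level by level until MAX DEPTH stops it. The sketch below simulates that fan-out offline against a small in-memory tree, which can be a cheap way to reason about depth and request counts before running the real crawler. The tree, the `simulateCrawl` function, and the assumption that the trigger request counts as depth 0 are all illustrative; nothing here calls Edge or Sitecore Search.

```javascript
// Offline simulation of the recursive crawl: each "response" lists children,
// and each child becomes a new request one level deeper (like the extractor).
// Assumption: the trigger request is depth 0; children of depth d are d + 1.
function simulateCrawl(tree, rootPath, maxDepth) {
  const visited = [];
  const queue = [{ path: rootPath, depth: 0 }];
  while (queue.length > 0) {
    const { path, depth } = queue.shift();
    visited.push(path);
    if (depth >= maxDepth) continue; // stop descending past MAX DEPTH
    const children = tree[path] || []; // stands in for data.item.children.results
    for (const name of children) {
      queue.push({ path: path + "/" + name, depth: depth + 1 });
    }
  }
  return visited;
}

// Hypothetical content tree keyed by item path.
const tree = {
  "/corporate": ["about", "news"],
  "/corporate/about": ["team"],
  "/corporate/news": ["2024"],
  "/corporate/news/2024": ["article-1"],
};

console.log(simulateCrawl(tree, "/corporate", 2));
```

With a depth limit of 2, the simulated crawl never reaches `/corporate/news/2024/article-1`, which is exactly the kind of gap a too-small MAX DEPTH would cause.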

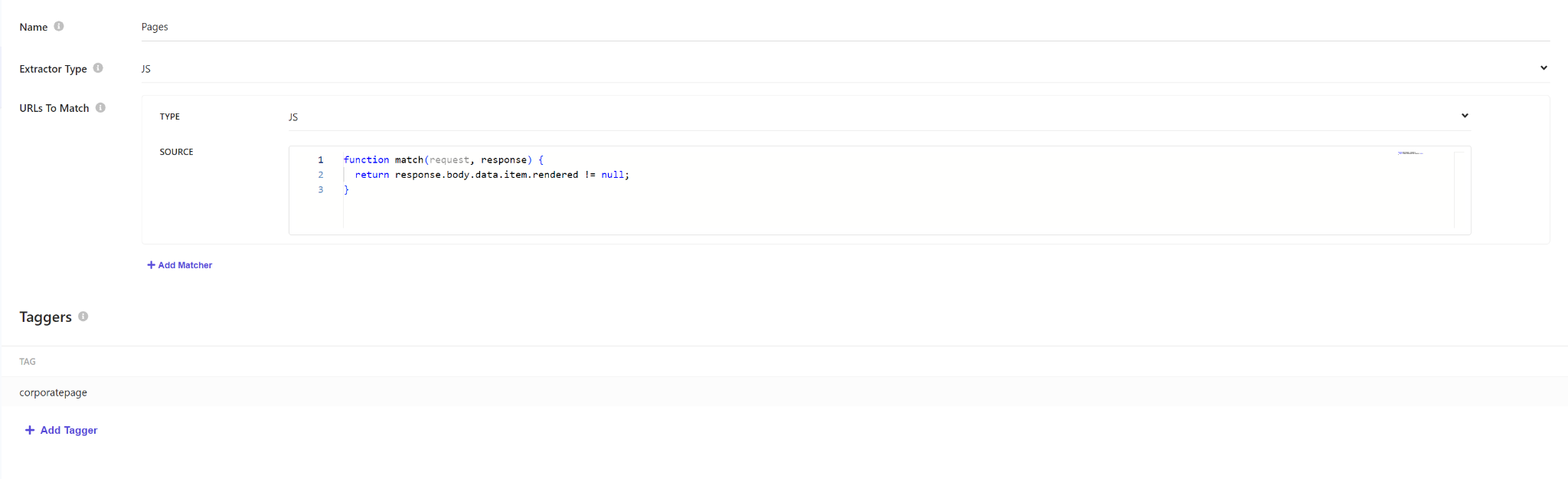

The final configuration step is the Document Extractor, which maps response data to entity fields. It has several fields: Name, Extractor Type, URLs To Match, and Taggers. I chose JavaScript (JS) as the Extractor Type because of the difficulties I had with JsonPath; the JS type proved more intuitive and straightforward for my use case.

For URLs To Match, I also used the JS type, with the following code:

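```javascript
// Pass only responses that contain an item with rendered layout data.
function match(request, response) {
  const item = response.body && response.body.data && response.body.data.item;
  return Boolean(item && item.rendered != null);
}
```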

This filter is designed to skip items that do not contain rendered data in the response. Naturally, you can implement additional conditions based on your specific requirements.
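As one example of an additional condition, the sketch below also skips items whose template is not in an allow-list, so that only page-type items become documents. The template names in `allowedTemplates` are hypothetical placeholders; replace them with your own. It reads `templateName` from `rendered.sitecore.route`, the same object the tagger reads, and the mock responses only imitate the shape of the Edge layout JSON.

```javascript
// Match only responses with rendered data AND an allowed page template.
// The template names below are hypothetical placeholders.
function match(request, response) {
  const allowedTemplates = ["Page", "Article Page"];
  const item = response.body && response.body.data && response.body.data.item;
  if (!item || item.rendered == null) return false;
  const route = item.rendered.sitecore && item.rendered.sitecore.route;
  return Boolean(route && allowedTemplates.indexOf(route.templateName) !== -1);
}

// Local check with mock responses.
const pageResponse = { body: { data: { item: { rendered: { sitecore: { route: { templateName: "Page" } } } } } } };
const folderResponse = { body: { data: { item: { rendered: null } } } };
console.log(match({}, pageResponse), match({}, folderResponse)); // true false
```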

The core of the Document Extractor is the Taggers section, where you map the response data to entity attributes. I currently use a single tagger, but if your API crawler is configured for multiple entities, you will need multiple taggers. Here is my tagger code:

```javascript
function extract(request, response) {
  const data = response.body.data;

  return [{
    'id': data.item.id,
    'type': data.item.rendered.sitecore.route.templateName,
    'name': data.item.rendered.sitecore.route.name,
    'title': data.item.rendered.sitecore.route.fields.Title.value,
    'description': data.item.rendered.sitecore.route.fields.MetaDescription.value,
    'url': data.item.rendered.sitecore.context.itemPath
  }];
}
```
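The tagger above throws if, for example, an item has no `Title` or `MetaDescription` field, and such failures are hard to diagnose from the crawler side. A more defensive variant is sketched below. The `pick` helper is hypothetical and simply walks a property path, returning a fallback when any step is missing. Falling back to the route name when there is no title, and returning an empty array when the response has no item, are my own design assumptions; the attribute names are unchanged and must still match the attributes defined on your entity.

```javascript
// Defensive tagger: missing fields produce fallbacks instead of exceptions.
function extract(request, response) {
  // Hypothetical helper: safely read obj.a.b.c given ["a", "b", "c"].
  function pick(obj, keys, fallback) {
    let current = obj;
    for (const key of keys) {
      if (current == null) return fallback;
      current = current[key];
    }
    return current == null ? fallback : current;
  }

  const item = pick(response, ["body", "data", "item"], null);
  if (!item) return []; // assumption: an empty array means "nothing to index"
  const route = pick(item, ["rendered", "sitecore", "route"], {});

  return [{
    'id': item.id,
    'type': pick(route, ["templateName"], ""),
    'name': pick(route, ["name"], ""),
    'title': pick(route, ["fields", "Title", "value"], pick(route, ["name"], "")),
    'description': pick(route, ["fields", "MetaDescription", "value"], ""),
    'url': pick(item, ["rendered", "sitecore", "context", "itemPath"], "")
  }];
}

// Mock response with no MetaDescription field:
const mock = { body: { data: { item: { id: "ABC", rendered: { sitecore: {
  context: { itemPath: "/about" },
  route: { name: "about", templateName: "Page", fields: { Title: { value: "About us" } } }
} } } } } };
console.log(extract({}, mock)[0].description === ""); // true
```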

These are the essential settings for configuring an API crawler that reads from Edge. The crawler also offers advanced settings such as Authentication, Crawler Schedule, and Incremental Updates. I have not used these features yet, but here is a brief overview:

- Authentication: This setting lets you configure authentication for your requests if the API requires it.
- Crawler Schedule: This setting lets you schedule when your crawler runs.
- Incremental Updates: This feature allows developers to use the Ingestion API to add or modify index documents, enabling more efficient and targeted updates to the index.

In conclusion, the API crawler is an excellent option for transferring data without additional processing. I did run into some challenges when first setting up the crawlers, but I eventually found the correct configuration, and I am impressed with how quickly and efficiently it transfers large volumes of data. One drawback I observed is that diagnosing issues can be difficult, because it is often hard to pinpoint which setting is wrong. I hope the Sitecore team will soon introduce log dashboards for Sitecore Search to improve troubleshooting and diagnostics.